Modelling with Diffsol

Ordinary Differential Equations (ODEs) are a powerful tool for modelling a wide range of physical systems. Unlike purely data-driven models, ODEs are based on the underlying physics, biology, or chemistry of the system being modelled. This makes them particularly useful for predicting the behaviour of a system under conditions that have not been observed. In this section, we will introduce the basics of ODE modelling, and illustrate their use with a series of examples written using the Diffsol crate.

The topics covered in this section are:

- First Order ODEs: First order ODEs are the simplest type of ODE. Any ODE system can be written as a set of first order ODEs, so libraries like Diffsol are designed such that the user provides their equations in this form.
    - Example: Population Dynamics: A simple example of a first order ODE system, modelling the interaction of predator and prey populations.

- Higher Order ODEs: Higher order ODEs are equations that involve derivatives of order greater than one. These can be converted to a system of first order ODEs, which is the form that Diffsol expects.
    - Example: Spring-mass systems: A simple example of a higher order ODE system, modelling the motion of a damped spring-mass system.

- Discrete Events: Discrete events are events that occur at specific times or when the system is in a particular state, rather than continuously. These can be modelled by treating the events as changes in the ODE system’s state. Diffsol provides an API to detect and handle these events.
    - Example: Compartmental models of Drug Delivery: Pharmacokinetic models describe how a drug is absorbed, distributed, metabolised, and excreted by the body. They are a common example of systems with discrete events, as the drug is often administered at discrete times.
    - Example: Bouncing Ball: A simple example of a system where the discrete event occurs when the ball hits the ground, instead of at a specific time.

- DAEs via the Mass Matrix: Differential Algebraic Equations (DAEs) are a generalisation of ODEs that include algebraic equations as well as differential equations. Diffsol can solve DAEs by treating them as ODEs with a mass matrix. This section explains how to use the mass matrix to solve DAEs.
    - Example: Electrical Circuits: Electrical circuits are a common example of DAEs; here we will model a simple low-pass LRC filter circuit.

- PDEs: Partial Differential Equations (PDEs) are a generalisation of ODEs that involve derivatives with respect to more than one variable (e.g. a spatial variable). Diffsol can be used to solve PDEs using the method of lines, where the spatial derivatives are discretised to form a system of ODEs.
    - Example: Heat Equation: The heat equation describes how heat diffuses in a domain over time. We will solve the heat equation in a 1D domain with Dirichlet boundary conditions.
    - Example: Physics-based Battery Simulation: A more complex example of a PDE system, modelling the charge and discharge of a lithium-ion battery. For this example we will use the PyBaMM library to form the ODE system, and Diffsol to solve it.

- Forward Sensitivity Analysis: Sensitivity analysis is a technique used to determine how the output of a model changes with respect to changes in the model parameters. Forward sensitivity analysis calculates the sensitivity of the model output with respect to the parameters by solving the ODE system and the sensitivity equations simultaneously.
    - Example: Fitting a predator-prey model to data: An example of fitting a predator-prey model to synthetic data using forward sensitivity analysis.

- Backwards Sensitivity Analysis: Backwards sensitivity analysis calculates the sensitivity of a loss function with respect to the parameters by first solving the ODE system and then solving the adjoint equations backwards in time. This is useful if your model has a large number of parameters, as it can then be more efficient than forward sensitivity analysis.
    - Example: Fitting a spring-mass model to data: An example of fitting a spring-mass model to synthetic data using backwards sensitivity analysis.
    - Example: Weather prediction using neural ODEs: An example of fitting a neural ODE to weather data using backwards sensitivity analysis.

Explicit First Order ODEs

Ordinary Differential Equations (ODEs) are often called rate equations because they describe how the rate of change of a system depends on its current state. For example, let’s assume we wish to model the growth of a population of cells within a petri dish. We could define the state of the system as the concentration of cells in the dish, and assign this state to a variable \(c\). The rate of change of the system would then be the rate at which the concentration of cells changes with time, which we could denote as \(\frac{dc}{dt}\). We know that our cells will grow at a rate proportional to the current concentration of cells, so this can be written as:

\[ \frac{dc}{dt} = k c \]

where \(k\) is a constant that describes the growth rate of the cells. This is a first order ODE, because it involves only the first derivative of the state variable \(c\) with respect to time.

We can extend this further to solve multiple equations simultaneously, in order to model the rate of change of more than one quantity. For example, say we had two populations of cells in the same dish that grow at different rates. We could define the state of the system as the concentrations of the two cell populations, and assign these states to variables \(c_1\) and \(c_2\). We could then write down both equations as:

\[ \begin{align*} \frac{dc_1}{dt} &= k_1 c_1 \\ \frac{dc_2}{dt} &= k_2 c_2 \end{align*} \]

and then combine them in a vector form as:

\[ \begin{bmatrix} \frac{dc_1}{dt} \\ \frac{dc_2}{dt} \end{bmatrix} = \begin{bmatrix} k_1 c_1 \\ k_2 c_2 \end{bmatrix} \]

By defining a new vector of state variables \(\mathbf{y} = [c_1, c_2]\) and a vector-valued function \(\mathbf{f}(\mathbf{y}, t) = \begin{bmatrix} k_1 c_1 \\ k_2 c_2 \end{bmatrix}\), we are left with the standard form of an explicit first order ODE system:

\[ \frac{d\mathbf{y}}{dt} = \mathbf{f}(\mathbf{y}, t) \]

This is an explicit equation for the derivative of the state, \(\frac{d\mathbf{y}}{dt}\), as a function of the state variables \(\mathbf{y}\) and of time \(t\).

We need one more piece of information to solve this system of ODEs: the initial conditions for the populations at time \(t = 0\). For example, if we started with a concentration of 10 for the first population and 5 for the second population, we would write:

\[ \mathbf{y}(0) = \begin{bmatrix} 10 \\ 5 \end{bmatrix} \]

Many ODE solver libraries, like Diffsol, require users to provide their ODEs in the form of a set of explicit first order ODEs. Given both the system of ODEs and the initial conditions, the solver can then integrate the equations forward in time to find the solution \(\mathbf{y}(t)\). This is the general process for solving ODEs, so it is important to know how to translate your problem into a set of first order ODEs, and thus into the general form of an explicit first order ODE system shown above. In the next two sections, we will look at an example of a first order ODE system in the area of population dynamics, and then solve it using Diffsol.
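The sketch below makes "integrate the equations forward in time" concrete. It is a minimal fixed-step fourth-order Runge-Kutta (RK4) integrator in plain Rust for the two-population growth model. It does not use Diffsol. The function names `growth_rhs`, `rk4_step` and `integrate`, and the growth rates \(k_1 = 0.1\) and \(k_2 = 0.2\), are hypothetical choices for illustration. Production solvers such as Diffsol add adaptive step sizes and error control, which this sketch omits.

```rust
/// Right-hand side f(y, t) of the two-population growth model.
/// The growth rates k1 = 0.1 and k2 = 0.2 are hypothetical example values.
fn growth_rhs(y: &[f64; 2], _t: f64) -> [f64; 2] {
    let (k1, k2) = (0.1, 0.2);
    [k1 * y[0], k2 * y[1]]
}

/// Advance the state by one fixed step `h` using the classical RK4 scheme.
fn rk4_step<F>(f: &F, y: &[f64; 2], t: f64, h: f64) -> [f64; 2]
where
    F: Fn(&[f64; 2], f64) -> [f64; 2],
{
    // Helper computing y + s * k component-wise.
    let add = |a: &[f64; 2], k: &[f64; 2], s: f64| [a[0] + s * k[0], a[1] + s * k[1]];
    let k1 = f(y, t);
    let k2 = f(&add(y, &k1, h / 2.0), t + h / 2.0);
    let k3 = f(&add(y, &k2, h / 2.0), t + h / 2.0);
    let k4 = f(&add(y, &k3, h), t + h);
    let mut out = *y;
    for i in 0..2 {
        out[i] += h / 6.0 * (k1[i] + 2.0 * k2[i] + 2.0 * k3[i] + k4[i]);
    }
    out
}

/// Integrate from t = 0 to `t_final` in `n` equal steps, starting from `y0`.
fn integrate<F>(f: F, y0: [f64; 2], t_final: f64, n: usize) -> [f64; 2]
where
    F: Fn(&[f64; 2], f64) -> [f64; 2],
{
    let h = t_final / n as f64;
    let mut y = y0;
    for step in 0..n {
        y = rk4_step(&f, &y, step as f64 * h, h);
    }
    y
}
```

For example, `integrate(growth_rhs, [10.0, 5.0], 1.0, 100)` should agree closely with the exact solution \([10 e^{0.1}, 5 e^{0.2}]\), because each equation is simple exponential growth.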

Population Dynamics - Predator-Prey Model

In this example, we will model the population dynamics of a predator-prey system using a set of first order ODEs. The Lotka-Volterra equations are a classic example of a predator-prey model, and describe the interactions between two species in an ecosystem. The first species is a prey species, which we will call \(x\), and the second species is a predator species, which we will call \(y\).

The rate of change of the prey population is governed by two terms: growth and predation. The growth term represents the natural increase in the prey population in the absence of predators, and is proportional to the current population of prey. The predation term represents the rate at which the predators consume the prey, and is proportional to the product of the prey and predator populations. The rate of change of the prey population can be written as:

\[ \frac{dx}{dt} = a x - b x y \]

where \(a\) is the growth rate of the prey population, and \(b\) is the predation rate.

The rate of change of the predator population is also governed by two terms: death and growth. The death term represents the natural decrease in the predator population in the absence of prey, and is proportional to the current population of predators. The growth term represents the rate at which the predators reproduce, and is proportional to the product of the prey and predator populations, since the predators need to consume the prey to reproduce. The rate of change of the predator population can be written as:

\[ \frac{dy}{dt} = c x y - d y \]

where \(c\) is the reproduction rate of the predators, and \(d\) is the death rate.

The Lotka-Volterra equations are a simple model of predator-prey dynamics, and make several assumptions that may not hold in real ecosystems. For example, the model assumes that the prey population grows exponentially in the absence of predators, that the predator population decays exponentially in the absence of prey, and that the spatial distribution of the species has no effect. Despite these simplifications, the Lotka-Volterra equations capture some of the essential features of predator-prey interactions, such as oscillations in the populations and the dependence of each species on the other. When modelling with ODEs, it is important to consider the simplest model that captures the behaviour of interest, and to be aware of the assumptions that underlie the model.

Putting the two equations together, we have a system of two first order ODEs:

\[ \begin{align*} \frac{dx}{dt} &= a x - b x y \\ \frac{dy}{dt} &= c x y - d y \end{align*} \]

which can be written in vector form as:

\[ \begin{bmatrix} \frac{dx}{dt} \\ \frac{dy}{dt} \end{bmatrix} = \begin{bmatrix} a x - b x y \\ c x y - d y \end{bmatrix} \]

or in the general form of a first order ODE system:

\[ \frac{d\mathbf{y}}{dt} = \mathbf{f}(\mathbf{y}, t) \]

where

\[\mathbf{y} = \begin{bmatrix} x \\ y \end{bmatrix} \]

and

\[\mathbf{f}(\mathbf{y}, t) = \begin{bmatrix} a x - b x y \\ c x y - d y \end{bmatrix}\]

We also have initial conditions for the populations at time \(t = 0\). We can set both populations to 1 at the start like so:

\[ \mathbf{y}(0) = \begin{bmatrix} 1 \\ 1 \end{bmatrix} \]
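It can help to check the right-hand side independently before handing the model to a solver. The sketch below writes \(\mathbf{f}(\mathbf{y}, t)\) for the Lotka-Volterra model as a plain Rust function. It integrates the model with a hand-rolled fixed-step RK4 scheme (not Diffsol) and monitors the quantity \(V(x, y) = c x - d \ln x + b y - a \ln y\). Differentiating \(V\) along the equations gives \((c x - d)(a - b y) + (b y - a)(c x - d) = 0\), so \(V\) is constant on exact solutions. The parameter values match the DiffSL model used in this section. The function names and the step count are hypothetical.

```rust
// Lotka-Volterra parameters, matching the DiffSL model in this section.
const A: f64 = 2.0 / 3.0;
const B: f64 = 4.0 / 3.0;
const C: f64 = 1.0;
const D: f64 = 1.0;

/// f(y, t) for y = [prey, predator] (the system does not depend on t).
fn lotka_volterra(y: [f64; 2]) -> [f64; 2] {
    let (x, p) = (y[0], y[1]);
    [A * x - B * x * p, C * x * p - D * p]
}

/// Quantity conserved by the exact Lotka-Volterra dynamics.
fn invariant(y: [f64; 2]) -> f64 {
    C * y[0] - D * y[0].ln() + B * y[1] - A * y[1].ln()
}

/// One classical RK4 step of size h.
fn rk4_step(y: [f64; 2], h: f64) -> [f64; 2] {
    let shift = |k: [f64; 2], s: f64| [y[0] + s * k[0], y[1] + s * k[1]];
    let k1 = lotka_volterra(y);
    let k2 = lotka_volterra(shift(k1, h / 2.0));
    let k3 = lotka_volterra(shift(k2, h / 2.0));
    let k4 = lotka_volterra(shift(k3, h));
    [
        y[0] + h / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0]),
        y[1] + h / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1]),
    ]
}

/// Integrate from y0 to t_final in n steps, returning every intermediate state.
fn simulate(y0: [f64; 2], t_final: f64, n: usize) -> Vec<[f64; 2]> {
    let h = t_final / n as f64;
    let mut traj = Vec::with_capacity(n + 1);
    let mut y = y0;
    traj.push(y);
    for _ in 0..n {
        y = rk4_step(y, h);
        traj.push(y);
    }
    traj
}
```

Calling `simulate([1.0, 1.0], 40.0, 40_000)` should give a trajectory whose populations stay positive and whose value of `invariant` barely drifts. A large drift in a check like this usually points to a typo in the right-hand side.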

Let’s solve this system of ODEs using the Diffsol crate. We will use the DiffSL domain-specific language to specify the problem. We could also have used Rust closures, but DiffSL lets us illustrate the modelling process with a minimum of Rust syntax.

```rust
use diffsol::{
    CraneliftJitModule, MatrixCommon, NalgebraVec, OdeBuilder, OdeEquations, OdeSolverMethod,
    Vector,
};
use plotly::{common::Mode, layout::Axis, layout::Layout, Plot, Scatter};
use std::fs;

type M = diffsol::NalgebraMat<f64>;
type LS = diffsol::NalgebraLU<f64>;
type CG = CraneliftJitModule;

fn main() {
    solve();
    phase_plane();
}

fn solve() {
    // Define the model in DiffSL: parameters, initial state u_i and right-hand side F_i.
    let problem = OdeBuilder::<M>::new()
        .build_from_diffsl::<CG>(
            "
            a { 2.0/3.0 } b { 4.0/3.0 } c { 1.0 } d { 1.0 }
            u_i {
                y1 = 1,
                y2 = 1,
            }
            F_i {
                a * y1 - b * y1 * y2,
                c * y1 * y2 - d * y2,
            }
        ",
        )
        .unwrap();

    // Solve from t = 0 to t = 40 using the BDF method with a dense LU linear solver.
    let mut solver = problem.bdf::<LS>().unwrap();
    let (ys, ts) = solver.solve(40.0).unwrap();

    // Each row of `ys` holds one state variable at every output time.
    let prey: Vec<_> = ys.inner().row(0).into_iter().copied().collect();
    let predator: Vec<_> = ys.inner().row(1).into_iter().copied().collect();
    let time: Vec<_> = ts.into_iter().collect();

    let prey = Scatter::new(time.clone(), prey)
        .mode(Mode::Lines)
        .name("Prey");
    let predator = Scatter::new(time, predator)
        .mode(Mode::Lines)
        .name("Predator");

    let mut plot = Plot::new();
    plot.add_trace(prey);
    plot.add_trace(predator);

    let layout = Layout::new()
        .x_axis(Axis::new().title("t"))
        .y_axis(Axis::new().title("population"));
    plot.set_layout(layout);
    let plot_html = plot.to_inline_html(Some("prey-predator"));
    fs::write("book/src/primer/images/prey-predator.html", plot_html)
        .expect("Unable to write file");
}
```

A phase plane plot of the predator-prey system is a useful visualisation of its dynamics. This plot shows the prey population on the x-axis and the predator population on the y-axis, and each trajectory in the phase plane represents the evolution of the populations over time. Let’s reframe the equations to introduce a new parameter \(y_0\), the initial population of both predator and prey. We can then plot the phase plane for different values of \(y_0\) to see how the system behaves for different initial conditions.

Our initial conditions are now:

\[ \mathbf{y}(0) = \begin{bmatrix} y_0 \\ y_0 \end{bmatrix} \]

so we can solve this system for different values of \(y_0\) and plot the phase plane for each case. The code is similar to the code above, but it introduces the new parameter and loops over different values of \(y_0\):

```rust
fn phase_plane() {
    // The model now has one input parameter, y0, the initial population of
    // both species; the builder's `.p([1.0])` supplies its initial value.
    let mut problem = OdeBuilder::<M>::new()
        .p([1.0])
        .build_from_diffsl::<CG>(
            "
            in { y0 = 1.0 }
            a { 2.0/3.0 } b { 4.0/3.0 } c { 1.0 } d { 1.0 }
            u_i {
                y1 = y0,
                y2 = y0,
            }
            F_i {
                a * y1 - b * y1 * y2,
                c * y1 * y2 - d * y2,
            }
        ",
        )
        .unwrap();

    let mut plot = Plot::new();
    for y0 in (1..6).map(f64::from) {
        // Update the parameter vector, then create a new solver whose
        // initial state is computed from the updated parameter.
        let p = NalgebraVec::from_element(1, y0, *problem.context());
        problem.eqn_mut().set_params(&p);
        let mut solver = problem.bdf::<LS>().unwrap();
        let (ys, _ts) = solver.solve(40.0).unwrap();

        // Plot predator against prey: one closed trajectory per value of y0.
        let prey: Vec<_> = ys.inner().row(0).into_iter().copied().collect();
        let predator: Vec<_> = ys.inner().row(1).into_iter().copied().collect();
        let phase = Scatter::new(prey, predator)
            .mode(Mode::Lines)
            .name(format!("y0 = {y0}"));
        plot.add_trace(phase);
    }

    let layout = Layout::new()
        .x_axis(Axis::new().title("x"))
        .y_axis(Axis::new().title("y"));
    plot.set_layout(layout);
    let plot_html = plot.to_inline_html(Some("prey-predator2"));
    fs::write("book/src/primer/images/prey-predator2.html", plot_html)
        .expect("Unable to write file");
}
```

Higher Order ODEs

The order of an ODE is the order of the highest derivative that appears in the equation. So far, we have only looked at first order ODEs, which involve only the first derivative of the state variable with respect to time. However, many physical systems are described by higher order ODEs, which involve second or higher derivatives of the state variable. A simple example of a second order ODE is the motion of a mass under the influence of gravity. The equation of motion for the mass can be written as:

\[ \frac{d^2x}{dt^2} = -g \]

where \(x\) is the position of the mass, \(t\) is time, and \(g\) is the acceleration due to gravity. This is a second order ODE because it involves the second derivative of the position with respect to time.

Higher order ODEs can always be rewritten as a system of first order ODEs by introducing new variables. For example, we can rewrite the second order ODE above as a system of two first order ODEs by introducing a new variable for the velocity of the mass:

\[ \begin{align*} \frac{dx}{dt} &= v \\ \frac{dv}{dt} &= -g \end{align*} \]

where \(v = \frac{dx}{dt}\) is the velocity of the mass. This is a system of two first order ODEs, which can be written in vector form as:

\[ \frac{d\mathbf{y}}{dt} = \mathbf{f}(\mathbf{y}, t) \]

where

\[ \mathbf{y} = \begin{bmatrix} x \\ v \end{bmatrix} \]

and

\[ \mathbf{f}(\mathbf{y}, t) = \begin{bmatrix} v \\ -g \end{bmatrix} \]
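The sketch below checks this reduction. It integrates the first-order system for \(\mathbf{y} = [x, v]\) with the forward (explicit) Euler method and compares the result with the exact solution \(x(t) = x_0 - \tfrac{1}{2} g t^2\), \(v(t) = -g t\) for a mass released from rest. Forward Euler is used here only because it is the simplest scheme; it is not what Diffsol uses. The function names, \(x_0 = 10\) and the step count are hypothetical.

```rust
/// Right-hand side of the falling-mass system in first-order form:
/// y = [x, v], dy/dt = [v, -g].
fn falling_mass_rhs(y: [f64; 2], g: f64) -> [f64; 2] {
    [y[1], -g]
}

/// Forward Euler, y_{n+1} = y_n + h * f(y_n), from t = 0 to t_final in n steps.
/// Returns the state [x, v] at t_final.
fn euler(y0: [f64; 2], g: f64, t_final: f64, n: usize) -> [f64; 2] {
    let h = t_final / n as f64;
    let mut y = y0;
    for _ in 0..n {
        let dy = falling_mass_rhs(y, g);
        y = [y[0] + h * dy[0], y[1] + h * dy[1]];
    }
    y
}
```

For this problem the velocity is reproduced exactly (up to rounding). Summing the Euler updates shows that the position overshoots the exact value by \(g h T / 2\) at time \(T\). Halving the step size \(h\) therefore halves the error. This first-order convergence is one reason practical solvers use higher-order, adaptive methods.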

In the next section, we’ll look at another example of a higher order ODE system: the spring-mass system, and solve this using Diffsol.

Example: Spring-mass systems

We will model a damped spring-mass system using a second order ODE. The system consists of a mass \(m\) attached to a spring with spring constant \(k\), and a damping force proportional to the velocity of the mass with damping coefficient \(c\).

The equation of motion for the mass can be written as:

\[ m \frac{d^2x}{dt^2} = -k x - c \frac{dx}{dt} \]

where \(x\) is the position of the mass and \(t\) is time. The negative signs on the right-hand side indicate that the spring force acts in the opposite direction to the displacement of the mass, and the damping force acts in the opposite direction to its velocity.

We can convert this to a system of two first order ODEs by introducing a new variable for the velocity of the mass:

\[ \begin{align*} \frac{dx}{dt} &= v \\ \frac{dv}{dt} &= -\frac{k}{m} x - \frac{c}{m} v \end{align*} \]

where \(v = \frac{dx}{dt}\) is the velocity of the mass.
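A useful property to check for this system is the mechanical energy \(E = \tfrac{1}{2} m v^2 + \tfrac{1}{2} k x^2\). Substituting the equations above gives \(\frac{dE}{dt} = m v \frac{dv}{dt} + k x v = -c v^2 \le 0\), so with damping the energy can never increase. The sketch below integrates the first-order system in plain Rust with a hand-rolled RK4 step (not Diffsol) and records \(E\) at every step. It uses the same values as the DiffSL model in this section: \(k = 1\), \(m = 1\), \(c = 0.1\), \(x(0) = 1\), \(v(0) = 0\). The function names and the step count are hypothetical.

```rust
// Parameters matching the DiffSL spring-mass model.
const K: f64 = 1.0; // spring constant
const M: f64 = 1.0; // mass
const C: f64 = 0.1; // damping coefficient

/// First-order form: y = [x, v], dy/dt = [v, -(k/m) x - (c/m) v].
fn spring_mass(y: [f64; 2]) -> [f64; 2] {
    [y[1], -K / M * y[0] - C / M * y[1]]
}

/// Mechanical energy: kinetic plus elastic potential energy.
fn energy(y: [f64; 2]) -> f64 {
    0.5 * M * y[1] * y[1] + 0.5 * K * y[0] * y[0]
}

/// One classical RK4 step of size h.
fn rk4_step(y: [f64; 2], h: f64) -> [f64; 2] {
    let shift = |k: [f64; 2], s: f64| [y[0] + s * k[0], y[1] + s * k[1]];
    let k1 = spring_mass(y);
    let k2 = spring_mass(shift(k1, h / 2.0));
    let k3 = spring_mass(shift(k2, h / 2.0));
    let k4 = spring_mass(shift(k3, h));
    [
        y[0] + h / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0]),
        y[1] + h / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1]),
    ]
}

/// Energy after every step when integrating from x(0) = 1, v(0) = 0 to t_final.
fn energy_history(t_final: f64, n: usize) -> Vec<f64> {
    let h = t_final / n as f64;
    let mut y = [1.0, 0.0];
    let mut history = vec![energy(y)];
    for _ in 0..n {
        y = rk4_step(y, h);
        history.push(energy(y));
    }
    history
}
```

With `energy_history(40.0, 4000)` the recorded energy should never increase from one step to the next. By \(t = 40\) it should be a small fraction of its initial value of \(0.5\).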

We can solve this system of ODEs using Diffsol with the following code:

```rust
use diffsol::{CraneliftJitModule, MatrixCommon, OdeBuilder, OdeSolverMethod};
use plotly::{common::Mode, layout::Axis, layout::Layout, Plot, Scatter};
use std::fs;

type M = diffsol::NalgebraMat<f64>;
type CG = CraneliftJitModule;
type LS = diffsol::NalgebraLU<f64>;

fn main() {
    // State u = [x, v]; F gives [dx/dt, dv/dt] for the damped spring-mass system.
    let problem = OdeBuilder::<M>::new()
        .build_from_diffsl::<CG>(
            "
            k { 1.0 } m { 1.0 } c { 0.1 }
            u_i {
                x = 1,
                v = 0,
            }
            F_i {
                v,
                -k/m * x - c/m * v,
            }
        ",
        )
        .unwrap();
    let mut solver = problem.bdf::<LS>().unwrap();
    let (ys, ts) = solver.solve(40.0).unwrap();

    // Row 0 of the solution matrix is the position x at each output time.
    let x: Vec<_> = ys.inner().row(0).into_iter().copied().collect();
    let time: Vec<_> = ts.into_iter().collect();

    let x_line = Scatter::new(time, x).mode(Mode::Lines);
    let mut plot = Plot::new();
    plot.add_trace(x_line);

    let layout = Layout::new()
        .x_axis(Axis::new().title("t"))
        .y_axis(Axis::new().title("x"));
    plot.set_layout(layout);
    let plot_html = plot.to_inline_html(Some("spring-mass-system"));
    fs::write("../src/primer/images/spring-mass-system.html", plot_html)
        .expect("Unable to write file");
}
```

Discrete Events

ODEs describe the continuous evolution of a system over time, but many systems also involve discrete events that occur at specific times or when the system reaches a particular state. For example, in a compartmental model of drug delivery, the administration of a drug is a discrete event that occurs at a specific time. In a bouncing ball model, the collision of the ball with the ground is a discrete event that changes the state of the system. Such events are difficult to model using ODEs alone, because they introduce discontinuities into the solution. While we can represent discrete events mathematically using delta functions, many ODE solvers are not designed to handle discontinuities, and may produce inaccurate results or fail to converge during the integration.

Diffsol provides a way to model discrete events in a system of ODEs by allowing users to manipulate the internal state of each solver during the time-stepping. Each solver has an internal state that holds information such as the current time \(t\), the current state of the system \(\mathbf{y}\), and other solver-specific information. When a discrete event occurs, the user can update the internal state of the solver to reflect the change in the system, and then continue the integration of the ODE as normal.

Diffsol also provides a way to stop the integration of the ODEs, either at a specific time or when a specific condition is met. This can be useful for modelling systems with discrete events, as it allows the user to control the integration of the ODEs and to handle the events in a flexible way.

The Solving the Problem and Root Finding sections provide an introduction to the API for solving ODEs and detecting events with Diffsol. In the next two sections, we will look at two examples of systems with discrete events, compartmental models of drug delivery and a bouncing ball, and solve them with Diffsol using this API.

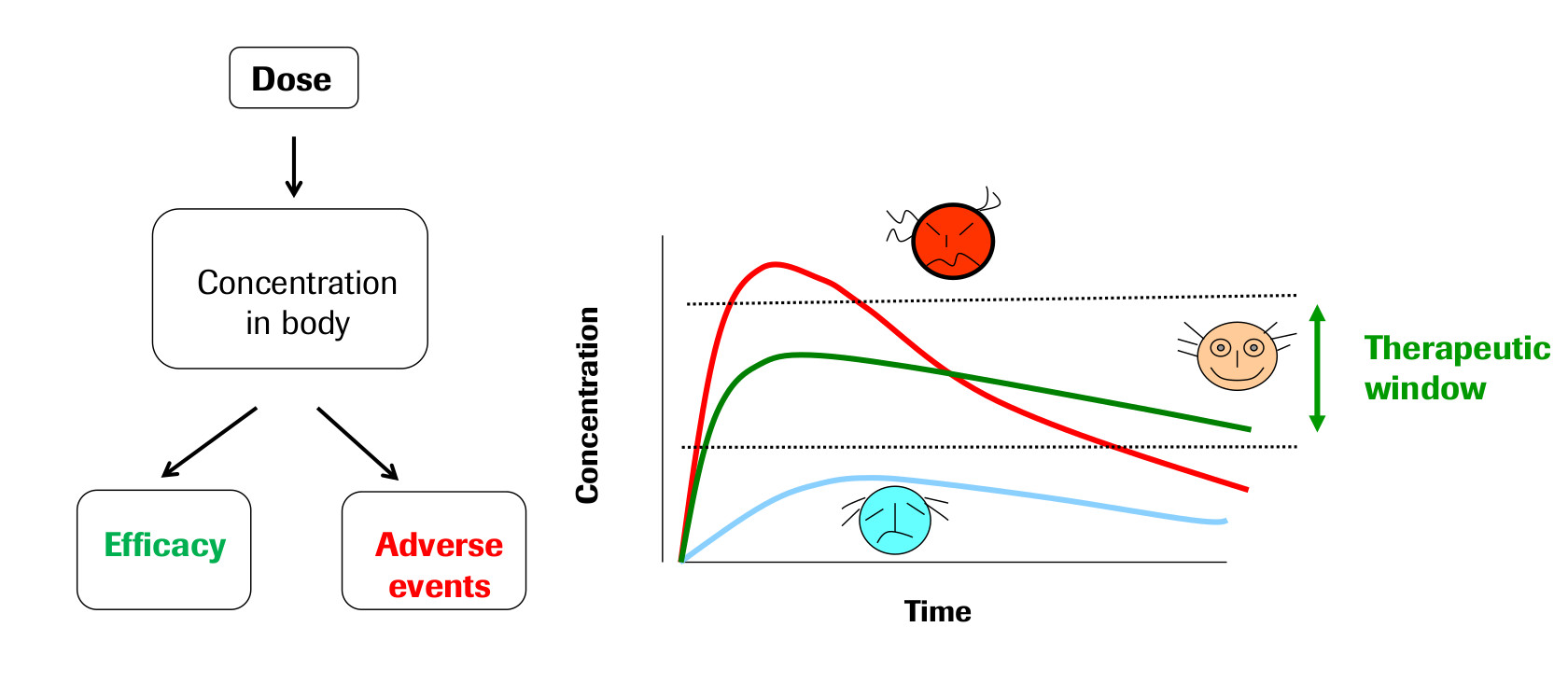

Example: Compartmental models of Drug Delivery

The field of Pharmacokinetics (PK) provides a quantitative basis for describing the delivery of a drug to a patient, the diffusion of that drug through the plasma/body tissue, and the subsequent clearance of the drug from the patient’s system. PK is used to ensure that there is sufficient concentration of the drug to maintain the required efficacy of the drug, while ensuring that the concentration levels remain below the toxic threshold. Pharmacokinetic (PK) models are often combined with Pharmacodynamic (PD) models, which model the positive effects of the drug, such as the binding of a drug to the biological target, and/or undesirable side effects, to form a full PKPD model of the drug-body interaction. This example will only focus on PK, neglecting the interaction with a PD model.

PK enables the following processes to be quantified:

- Absorption

- Distribution

- Metabolism

- Excretion

These are often referred to as ADME, and together they describe the drug concentration in the body when medicine is prescribed. These ADME processes are typically described by zeroth-order or first-order rate equations for the quantity of drug \(q\), with a given rate parameter (\(k^*\) or \(k\) respectively), for example:

\[ \frac{dq}{dt} = -k^*, \]

\[ \frac{dq}{dt} = -k q. \]
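Both rate laws can be integrated directly, which gives a useful sanity check for any numerical solution. Writing \(q_0\) for the initial quantity \(q(0)\) (a symbol introduced here for illustration), the solutions are:

\[ \frac{dq}{dt} = -k^* \;\Rightarrow\; q(t) = q_0 - k^* t, \qquad \frac{dq}{dt} = -k q \;\Rightarrow\; q(t) = q_0 e^{-k t}. \]

The zeroth-order solution decreases linearly and only makes physical sense while \(q(t) \ge 0\). The first-order solution decays exponentially, with half-life \(t_{1/2} = \ln 2 / k\).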

The body itself is modelled as one or more compartments, each of which is defined as a kinetically homogeneous unit (unlike physiologically based pharmacokinetic (PBPK) modelling, these compartments do not relate to specific organs in the body). There is typically a main central compartment into which the drug is administered and from which it is excreted, combined with zero or more peripheral compartments that exchange drug with the central compartment (see Fig 2). Each peripheral compartment is only connected to the central compartment.

The following example PK model describes the two-compartment model shown diagrammatically in the figure above. The time-dependent variables to be solved for are the drug quantities in the central and peripheral compartments, \(q_c\) and \(q_{p1}\) respectively (units: [ng]).

\[ \frac{dq_c}{dt} = \text{Dose}(t) - \frac{q_c}{V_c} CL - Q_{p1} \left ( \frac{q_c}{V_c} - \frac{q_{p1}}{V_{p1}} \right ), \]

\[ \frac{dq_{p1}}{dt} = Q_{p1} \left ( \frac{q_c}{V_c} - \frac{q_{p1}}{V_{p1}} \right ). \]

This model describes an intravenous bolus dosing protocol, with linear clearance from the central compartment (non-linear clearance processes are also possible, but are not considered here). The dose function \(\text{Dose}(t)\) consists of instantaneous doses of \(X\) ng of the drug at one or more time points. The other input parameters to the model are:

- \(V_c\) [mL], the volume of the central compartment

- \(V_{p1}\) [mL], the volume of the first peripheral compartment

- \(CL\) [mL/h], the clearance/elimination rate from the central compartment

- \(Q_{p1}\) [mL/h], the transition rate between central compartment and peripheral compartment 1

We will solve this system of ODEs using the Diffsol crate. Rather than trying to write down the dose function as a mathematical function, we will drop it from the equations and instead use Diffsol’s API to apply each dose at its specific time point.

First, let’s write down the equations in the standard form of a first order ODE system:

\[ \frac{d\mathbf{y}}{dt} = \mathbf{f}(\mathbf{y}, t) \]

where

\[ \mathbf{y} = \begin{bmatrix} q_c \\ q_{p1} \end{bmatrix} \]

and

\[ \mathbf{f}(\mathbf{y}, t) = \begin{bmatrix} - \frac{q_c}{V_c} CL - Q_{p1} \left ( \frac{q_c}{V_c} - \frac{q_{p1}}{V_{p1}} \right ) \\ Q_{p1} \left ( \frac{q_c}{V_c} - \frac{q_{p1}}{V_{p1}} \right ) \end{bmatrix} \]

We will also need to specify the initial conditions for the system:

\[ \mathbf{y}(0) = \begin{bmatrix} 0 \\ 0 \end{bmatrix} \]

For the dose function, we will specify a dose of 1000 ng at regular intervals of 6 hours. We will also specify the other parameters of the model:

\[ V_c = 1000 \text{ mL}, \quad V_{p1} = 1000 \text{ mL}, \quad CL = 100 \text{ mL/h}, \quad Q_{p1} = 50 \text{ mL/h} \]
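The "stop, apply the dose, continue" pattern can be sketched without any solver library. The code below is a minimal illustration and not Diffsol’s API. It advances the two-compartment model with a fixed-step RK4 scheme (a hand-rolled stand-in for Diffsol’s adaptive solvers) and shortens the last step so that it lands exactly on each dose time. It then adds the dose to \(q_c\) before continuing. The parameter values and the 6-hourly dosing schedule are the ones given above. The function names, the step size and the final time of 24 h are hypothetical.

```rust
// Model parameters from the text: volumes in mL, flow rates in mL/h.
const VC: f64 = 1000.0;
const VP1: f64 = 1000.0;
const CL: f64 = 100.0;
const QP1: f64 = 50.0;

/// f(y, t) for y = [q_c, q_p1], with the dose term removed.
fn pk_rhs(y: [f64; 2]) -> [f64; 2] {
    let transfer = QP1 * (y[0] / VC - y[1] / VP1);
    [-y[0] / VC * CL - transfer, transfer]
}

/// One classical RK4 step of size h.
fn rk4_step(y: [f64; 2], h: f64) -> [f64; 2] {
    let shift = |k: [f64; 2], s: f64| [y[0] + s * k[0], y[1] + s * k[1]];
    let k1 = pk_rhs(y);
    let k2 = pk_rhs(shift(k1, h / 2.0));
    let k3 = pk_rhs(shift(k2, h / 2.0));
    let k4 = pk_rhs(shift(k3, h));
    [
        y[0] + h / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0]),
        y[1] + h / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1]),
    ]
}

/// Simulate bolus doses given as (time [h], amount [ng]), sorted by time.
/// Returns (t, [q_c, q_p1]) samples, with a pre- and post-dose sample at each dose time.
fn simulate_doses(doses: &[(f64, f64)], t_final: f64, max_h: f64) -> Vec<(f64, [f64; 2])> {
    let mut t = 0.0;
    let mut y = [0.0, 0.0];
    let mut out = vec![(t, y)];
    // Treat t_final as one more "stop time" with a zero dose.
    let stops = doses.iter().copied().chain(std::iter::once((t_final, 0.0)));
    for (t_stop, dose) in stops {
        // Integrate up to the stop time, shortening the last step to land on it exactly.
        while t < t_stop {
            let h = max_h.min(t_stop - t);
            y = rk4_step(y, h);
            t += h;
            out.push((t, y));
        }
        // Stop time reached: apply the dose to the central compartment.
        t = t_stop;
        y[0] += dose;
        out.push((t, y));
    }
    out
}
```

Because \(\frac{d}{dt}(q_c + q_{p1}) = -\frac{q_c}{V_c} CL \le 0\), the total amount of drug in the body should never exceed the total amount dosed. This gives a quick check on the output of `simulate_doses`.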

Let’s now solve this system of ODEs using Diffsol. To implement the discrete dose events, we set a stop time for the simulation at each dose event using the OdeSolverMethod::set_stop_time method. During timestepping we can check the return value of the OdeSolverMethod::step method to see if the solver has reached the stop time. If it has, we can apply the dose and continue the simulation.

```rust
use diffsol::{CraneliftJitModule, OdeBuilder, OdeSolverMethod, OdeSolverStopReason};
use plotly::{common::Mode, layout::Axis, layout::Layout, Plot, Scatter};
use std::fs;

type M = diffsol::NalgebraMat<f64>;
type CG = CraneliftJitModule;
type LS = diffsol::NalgebraLU<f64>;

fn main() {
    let problem = OdeBuilder::<M>::new()
        .build_from_diffsl::<CG>(
            "
            Vc { 1000.0 } Vp1 { 1000.0 } CL { 100.0 } Qp1 { 50.0 }
            u_i {
                qc = 0,
                qp1 = 0,
            }
            F_i {
                - qc / Vc * CL - Qp1 * (qc / Vc - qp1 / Vp1),
                Qp1 * (qc / Vc - qp1 / Vp1),
            }
        ",
        )
        .unwrap();
    let mut solver = problem.bdf::<LS>().unwrap();
    let doses = vec![(0.0, 1000.0), (6.0, 1000.0), (12.0, 1000.0), (18.0, 1000.0)];

    let mut q_c = Vec::new();
    let mut q_p1 = Vec::new();
    let mut time = Vec::new();

    // apply the first dose and save the initial state
    solver.state_mut().y[0] = doses[0].1;
    q_c.push(solver.state().y[0]);
    q_p1.push(solver.state().y[1]);
    time.push(0.0);

    // solve and apply the remaining doses
    for (t, dose) in doses.into_iter().skip(1) {
        solver.set_stop_time(t).unwrap();
        loop {
            let ret = solver.step();
            q_c.push(solver.state().y[0]);
            q_p1.push(solver.state().y[1]);
            time.push(solver.state().t);
            match ret {
                Ok(OdeSolverStopReason::InternalTimestep) => continue,
                Ok(OdeSolverStopReason::TstopReached) => break,
                _ => panic!("unexpected solver error"),
            }
        }
        solver.state_mut().y[0] += dose;
    }

    let mut plot = Plot::new();
    let q_c = Scatter::new(time.clone(), q_c)
        .mode(Mode::Lines)
        .name("q_c");
    let q_p1 = Scatter::new(time, q_p1).mode(Mode::Lines).name("q_p1");
    plot.add_trace(q_c);
    plot.add_trace(q_p1);

    let layout = Layout::new()
        .x_axis(Axis::new().title("t [h]"))
        .y_axis(Axis::new().title("amount [ng]"));
    plot.set_layout(layout);
    let plot_html = plot.to_inline_html(Some("drug-delivery"));
    fs::write("../src/primer/images/drug-delivery.html", plot_html).expect("Unable to write file");
}
```

Example: Bouncing Ball

Modelling a bouncing ball is a simple and intuitive example of a system with discrete events. The ball is dropped from a height \(h\) and bounces off the ground with a coefficient of restitution \(e\). When the ball hits the ground, its velocity is reversed and scaled by the coefficient of restitution, and the ball rises and then continues to fall until it hits the ground again. This process repeats until halted.

The second order ODE that describes the motion of the ball is given by:

\[ \frac{d^2x}{dt^2} = -g \]

where \(x\) is the position of the ball, \(t\) is time, and \(g\) is the acceleration due to gravity. We can rewrite this as a system of two first order ODEs by introducing a new variable for the velocity of the ball:

\[ \begin{align*} \frac{dx}{dt} &= v \\ \frac{dv}{dt} &= -g \end{align*} \]

where \(v = \frac{dx}{dt}\) is the velocity of the ball. This is a system of two first order ODEs, which can be written in vector form as:

\[ \frac{d\mathbf{y}}{dt} = \mathbf{f}(\mathbf{y}, t) \]

where

\[ \mathbf{y} = \begin{bmatrix} x \\ v \end{bmatrix} \]

and

\[ \mathbf{f}(\mathbf{y}, t) = \begin{bmatrix} v \\ -g \end{bmatrix} \]

The initial conditions for the ball, including the height from which it is dropped and its initial velocity, are given by:

\[ \mathbf{y}(0) = \begin{bmatrix} h \\ 0 \end{bmatrix} \]

When the ball hits the ground, we need to update its velocity according to the coefficient of restitution, which is the ratio of the ball’s speed after the bounce to its speed before the bounce. The velocity after the bounce \(v'\) is given by:

\[ v' = -e v \]

where \(e\) is the coefficient of restitution. However, to implement this in our ODE solver, we need to detect when the ball hits the ground. We can do this by using Diffsol’s event handling feature, which allows us to specify a function that is equal to zero when the event occurs, i.e. when the ball hits the ground. This function \(g(\mathbf{y}, t)\) is called an event or root function, and for our bouncing ball problem, it is given by:

\[ g(\mathbf{y}, t) = x \]

where \(x\) is the position of the ball. When the ball hits the ground, the event function passes through zero, Diffsol stops the integration, and we can then update the velocity of the ball accordingly.
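The idea behind an event (root) function can be illustrated without Diffsol, using a hypothetical plain-Rust version. Between bounces the motion is a parabola, so each step can be advanced exactly. After every step the sketch checks \(g(\mathbf{y}, t) = x\) for a change from positive to non-positive. It then locates the root inside that step by bisection and applies \(v' = -e v\). Diffsol’s own root finding operates on its solver’s solution, so treat this as an illustration of the concept rather than of the library’s algorithm. The values \(g = 9.81\), \(h = 10\) and \(e = 0.8\) match this example. The step size and function names are assumptions.

```rust
const GRAVITY: f64 = 9.81;

/// Exact state [x, v] after time dt under constant acceleration -GRAVITY.
fn advance(y: [f64; 2], dt: f64) -> [f64; 2] {
    [y[0] + y[1] * dt - 0.5 * GRAVITY * dt * dt, y[1] - GRAVITY * dt]
}

/// Event (root) function: the height of the ball above the ground.
fn event(y: [f64; 2]) -> f64 {
    y[0]
}

/// Times of the first `n_bounces` impacts for a ball dropped from height `h0`,
/// using fixed steps of size `dt`. Assumes 0 < e < 1 and a modest `n_bounces`,
/// so that every flight lasts much longer than one step.
fn bounce_times(h0: f64, e: f64, dt: f64, n_bounces: usize) -> Vec<f64> {
    let (mut t, mut y) = (0.0, [h0, 0.0]);
    let mut times = Vec::new();
    while times.len() < n_bounces {
        let y_next = advance(y, dt);
        // A sign change from positive to non-positive means the root lies in this step.
        if event(y) > 0.0 && event(y_next) <= 0.0 {
            // Bisection on the time offset within the step.
            let (mut lo, mut hi) = (0.0, dt);
            for _ in 0..60 {
                let mid = 0.5 * (lo + hi);
                if event(advance(y, mid)) > 0.0 { lo = mid } else { hi = mid }
            }
            let y_hit = advance(y, hi);
            t += hi;
            times.push(t);
            // Apply the bounce: place the ball on the ground, reverse and scale its velocity.
            y = [0.0, -e * y_hit[1]];
        } else {
            t += dt;
            y = y_next;
        }
    }
    times
}
```

With `bounce_times(10.0, 0.8, 0.01, 3)` the first impact should occur at \(t_1 = \sqrt{2h/g}\). Each bounce multiplies the speed by \(e\), so each flight time is \(e\) times the previous one. This places the next impacts at \(t_1(1 + 2e)\) and \(t_1(1 + 2e + 2e^2)\).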

In code, the bouncing ball problem can be solved using Diffsol as follows:

```rust
use diffsol::{CraneliftJitModule, OdeBuilder, OdeSolverMethod, OdeSolverStopReason, Vector};
use plotly::{common::Mode, layout::Axis, layout::Layout, Plot, Scatter};
use std::fs;

type M = diffsol::NalgebraMat<f64>;
type CG = CraneliftJitModule;
type LS = diffsol::NalgebraLU<f64>;

fn main() {
    let e = 0.8;
    let problem = OdeBuilder::<M>::new()
        .build_from_diffsl::<CG>(
            "
            g { 9.81 } h { 10.0 }
            u_i {
                x = h,
                v = 0,
            }
            F_i {
                v,
                -g,
            }
            stop {
                x,
            }
        ",
        )
        .unwrap();
    let mut solver = problem.bdf::<LS>().unwrap();

    let mut x = Vec::new();
    let mut v = Vec::new();
    let mut t = Vec::new();
    let final_time = 10.0;

    // save the initial state
    x.push(solver.state().y[0]);
    v.push(solver.state().y[1]);
    t.push(0.0);

    // solve until the final time is reached
    solver.set_stop_time(final_time).unwrap();
    loop {
        match solver.step() {
            Ok(OdeSolverStopReason::InternalTimestep) => (),
            Ok(OdeSolverStopReason::RootFound(t)) => {
                // get the state when the event occurred
                let mut y = solver.interpolate(t).unwrap();

                // update the velocity of the ball
                y[1] *= -e;

                // make sure the ball is above the ground
                y[0] = y[0].max(f64::EPSILON);

                // set the state to the updated state
                solver.state_mut().y.copy_from(&y);
                solver.state_mut().dy[0] = y[1];
                *solver.state_mut().t = t;
            }
            Ok(OdeSolverStopReason::TstopReached) => break,
            Err(_) => panic!("unexpected solver error"),
        }
        x.push(solver.state().y[0]);
        v.push(solver.state().y[1]);
        t.push(solver.state().t);
    }

    let mut plot = Plot::new();
    let x = Scatter::new(t.clone(), x).mode(Mode::Lines).name("x");
    let v = Scatter::new(t, v).mode(Mode::Lines).name("v");
    plot.add_trace(x);
    plot.add_trace(v);

    let layout = Layout::new()
        .x_axis(Axis::new().title("t"))
        .y_axis(Axis::new());
    plot.set_layout(layout);
    let plot_html = plot.to_inline_html(Some("bouncing-ball"));
    fs::write("../src/primer/images/bouncing-ball.html", plot_html).expect("Unable to write file");
}
```

DAEs via the Mass Matrix

Differential-algebraic equations (DAEs) are a generalisation of ordinary differential equations (ODEs) that include algebraic equations, or equations that do not involve derivatives. Algebraic equations arise in many physical systems and are often used to model constraints on the system, such as conservation laws or other relationships between the state variables. For example, in an electrical circuit, the current flowing into a node must equal the current flowing out of the node, which can be written as an algebraic equation.

DAEs can be written in the general implicit form:

\[ \mathbf{F}(\mathbf{y}, \mathbf{y}', t) = 0 \]

where \(\mathbf{y}\) is the vector of state variables, \(\mathbf{y}'\) is the vector of derivatives of the state variables, and \(\mathbf{F}\) is a vector-valued function that describes the system of equations. However, for the purposes of this primer and the capabilities of Diffsol, we will focus on a specific class called semi-explicit index-1 DAEs, which can be written as a combination of differential and algebraic equations:

\[ \begin{align*} \frac{d\mathbf{y}}{dt} &= \mathbf{f}(\mathbf{y}, t) \\ 0 &= \mathbf{g}(\mathbf{y}, t) \end{align*} \]

where \(\mathbf{f}\) is the vector-valued function that describes the differential equations and \(\mathbf{g}\) is the vector-valued function that describes the algebraic equations. The key difference between DAEs and ODEs is that DAEs include algebraic equations that must be satisfied at each time step, in addition to the differential equations that describe the rate of change of the state variables.

How does this relate to the standard form of an explicit ODE that we have seen before? Recall that an explicit ODE can be written as:

\[ \frac{d\mathbf{y}}{dt} = \mathbf{f}(\mathbf{y}, t) \]

Let’s generalise this equation by including a matrix \(M\) that multiplies the derivative term:

\[ M \frac{d\mathbf{y}}{dt} = \mathbf{f}(\mathbf{y}, t) \]

When \(M\) is the identity matrix (i.e. a matrix with ones on the diagonal and zeros elsewhere), this reduces to the standard form of an explicit ODE. However, when some rows of \(M\) are zero (for example, a diagonal \(M\) with some zero diagonal entries), the corresponding equations lose their derivative term and become algebraic equations of the form \(0 = f_i(\mathbf{y}, t)\), so the system reduces to the semi-explicit DAE shown above. The matrix \(M\) is called the mass matrix.

Thus, we now have a general form of a set of differential equations, that includes both ODEs and semi-explicit DAEs. This general form is used by Diffsol to allow users to specify a wide range of problems, from simple ODEs to more complex DAEs. In the next section, we will look at a few examples of DAEs and how to solve them using Diffsol and a mass matrix.
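As a concrete illustration of the mass-matrix form, the sketch below evaluates the implicit residual \(\mathbf{F}(\mathbf{y}, \mathbf{y}', t) = M\mathbf{y}' - \mathbf{f}(\mathbf{y}, t)\) for a small, hypothetical two-variable system whose mass matrix is diagonal and stored as a vector of its diagonal entries. It is plain Rust and does not use the Diffsol API. The functions `rhs`, `residual` and `algebraic_rows` are our own illustrative names. Rows with a zero diagonal entry are algebraic, so the corresponding entry of \(\mathbf{y}'\) has no effect on the residual.

```rust
/// Hypothetical right-hand side f(y, t) of a 2-variable semi-explicit DAE:
///   y0' = -y0 + y1      (differential equation)
///   0   =  y0 - 2 y1    (algebraic constraint)
fn rhs(y: &[f64], _t: f64) -> Vec<f64> {
    vec![-y[0] + y[1], y[0] - 2.0 * y[1]]
}

/// Implicit residual F(y, y', t) = M y' - f(y, t) for a diagonal mass matrix
/// given by its diagonal entries `mass_diag`.
fn residual(mass_diag: &[f64], y: &[f64], dydt: &[f64], t: f64) -> Vec<f64> {
    let f = rhs(y, t);
    mass_diag
        .iter()
        .zip(dydt)
        .zip(f)
        .map(|((m, dy), fi)| m * dy - fi)
        .collect()
}

/// Rows with a zero on the diagonal of M are algebraic equations.
fn algebraic_rows(mass_diag: &[f64]) -> Vec<usize> {
    mass_diag
        .iter()
        .enumerate()
        .filter(|(_, m)| **m == 0.0)
        .map(|(i, _)| i)
        .collect()
}

fn main() {
    let mass_diag = [1.0, 0.0];
    // A consistent state: y0 = 2 y1 satisfies the algebraic constraint.
    let y = [2.0, 1.0];
    // Only y0' is constrained; the entry for y1' is multiplied by zero.
    let dydt = [-1.0, 123.0];
    println!("algebraic rows: {:?}", algebraic_rows(&mass_diag));
    println!("residual: {:?}", residual(&mass_diag, &y, &dydt, 0.0));
}
```

In `main`, the value 123.0 given for the derivative of the algebraic variable is arbitrary. The residual is zero regardless, because the state satisfies both the differential and the algebraic equation.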

Example: Electrical Circuits

Let’s consider the following low-pass LRC filter circuit:

    +---L---+---C---+
    |       |       |
V_s =       R       |
    |       |       |
    +-------+-------+

The circuit consists of a resistor \(R\), an inductor \(L\), and a capacitor \(C\) connected to a voltage source \(V_s\). The voltage across the resistor \(V\) is given by Ohm’s law:

\[ V = R i_R \]

where \(i_R\) is the current flowing through the resistor. The voltage across the inductor is \(V_s - V\), so the inductor current \(i_L\) satisfies \(L \, di_L/dt = V_s - V\), or:

\[ \frac{di_L}{dt} = \frac{V_s - V}{L} \]

where \(di_L/dt\) is the rate of change of the inductor current with respect to time. The voltage across the capacitor is the same as the voltage across the resistor, and the equation for an ideal capacitor is:

\[ \frac{dV}{dt} = \frac{i_C}{C} \]

where \(i_C\) is the current flowing through the capacitor. The sum of the currents flowing into and out of the top-center node of the circuit must be zero according to Kirchhoff’s current law:

\[ i_L = i_R + i_C \]

Thus we have a system of two differential equations and two algebraic equations that describe the evolution of the currents through the resistor, inductor, and capacitor, and the voltage across the resistor. We can write these equations in the general form:

\[ M \frac{d\mathbf{y}}{dt} = \mathbf{f}(\mathbf{y}, t) \]

where

\[ \mathbf{y} = \begin{bmatrix} i_R \\ i_L \\ i_C \\ V \end{bmatrix} \]

and

\[ \mathbf{f}(\mathbf{y}, t) = \begin{bmatrix} V - R i_R \\ \frac{V_s - V}{L} \\ i_L - i_R - i_C \\ \frac{i_C}{C} \end{bmatrix} \]

The mass matrix \(M\) has ones on the diagonal for the differential equations and zeros for the algebraic equations.

\[ M = \begin{bmatrix} 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} \]

Instead of providing the mass matrix explicitly, in the DiffSL language you specify the product of the mass matrix and the derivative vector, \(M \frac{d\mathbf{y}}{dt}\), which here is:

\[ M \frac{d\mathbf{y}}{dt} = \begin{bmatrix} 0 \\ \frac{di_L}{dt} \\ 0 \\ \frac{dV}{dt} \end{bmatrix} \]

The initial conditions for the system are:

\[ \mathbf{y}(0) = \begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \end{bmatrix} \]

The voltage source \(V_s\) acts as a forcing function for the system, and we specify it as a sinusoidal function of time:

\[ V_s(t) = V_0 \sin(\omega t) \]

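where \(V_0\) is the amplitude and \(\omega\) is the angular frequency of the source. Since this is a low-pass filter, we will choose a relatively high frequency for the source, \(\omega = 100\), well above the resonant frequency \(1/\sqrt{LC} \approx 31.6\) for \(L = 1\) and \(C = 0.001\), to demonstrate the filtering effect of the circuit.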
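Diffsol handles the singular mass matrix inside its implicit solvers. To see why a zero row in \(M\) causes no difficulty for an implicit method, here is a minimal backward Euler sketch for this circuit in plain Rust. It is our own illustration, not Diffsol’s algorithm (Diffsol uses BDF methods with adaptive step sizes), and it takes its parameter values from the DiffSL model used in this section. Because \(\mathbf{f}\) is linear in \(\mathbf{y}\), writing \(\mathbf{f}(\mathbf{y}, t) = J\mathbf{y} + \mathbf{s}(t)\) turns each step into the linear system \((M - \Delta t\, J)\,\mathbf{y}_{n+1} = M\mathbf{y}_n + \Delta t\, \mathbf{s}(t_{n+1})\). The matrix \(M - \Delta t\, J\) is non-singular even though \(M\) is not, and its algebraic rows reduce to \(0 = f_i(\mathbf{y}_{n+1}, t_{n+1})\), so the constraints hold at every step.

```rust
/// Solve the dense system `a x = b` by Gaussian elimination with partial
/// pivoting (adequate for this tiny 4x4 example).
fn solve(mut a: [[f64; 4]; 4], mut b: [f64; 4]) -> [f64; 4] {
    let n = 4;
    for col in 0..n {
        // choose the row with the largest pivot to keep elimination stable
        let piv = (col..n)
            .max_by(|&i, &j| a[i][col].abs().partial_cmp(&a[j][col].abs()).unwrap())
            .unwrap();
        a.swap(col, piv);
        b.swap(col, piv);
        for row in (col + 1)..n {
            let factor = a[row][col] / a[col][col];
            for k in col..n {
                a[row][k] -= factor * a[col][k];
            }
            b[row] -= factor * b[col];
        }
    }
    // back substitution
    let mut x = [0.0; 4];
    for row in (0..n).rev() {
        let sum: f64 = ((row + 1)..n).map(|k| a[row][k] * x[k]).sum();
        x[row] = (b[row] - sum) / a[row][row];
    }
    x
}

/// Backward Euler for M y' = J y + s(t), with y = [iR, iL, iC, V].
/// Returns the state after every step, starting from y(0) = 0.
fn simulate(t_end: f64, dt: f64) -> Vec<[f64; 4]> {
    // parameter values copied from the DiffSL model in this section
    let (r, l, c, v0, omega): (f64, f64, f64, f64, f64) = (100.0, 1.0, 0.001, 10.0, 100.0);
    let m = [0.0, 1.0, 0.0, 1.0]; // diagonal of the mass matrix
    // Jacobian of f with respect to y (constant, because f is linear in y)
    let j = [
        [-r, 0.0, 0.0, 1.0],       // V - R iR
        [0.0, 0.0, 0.0, -1.0 / l], // (Vs - V) / L
        [-1.0, 1.0, -1.0, 0.0],    // iL - iR - iC
        [0.0, 0.0, 1.0 / c, 0.0],  // iC / C
    ];
    let mut y = [0.0; 4]; // consistent initial condition: everything zero
    let mut history = vec![y];
    let steps = (t_end / dt).round() as usize;
    for n in 1..=steps {
        let t = n as f64 * dt;
        let vs = v0 * (omega * t).sin();
        // assemble (M - dt J) and M y_n + dt s(t_{n+1})
        let mut a = [[0.0; 4]; 4];
        let mut b = [0.0; 4];
        for i in 0..4 {
            for k in 0..4 {
                a[i][k] = -dt * j[i][k];
            }
            a[i][i] += m[i];
            b[i] = m[i] * y[i];
        }
        b[1] += dt * vs / l; // only the inductor row has a source term
        y = solve(a, b);
        history.push(y);
    }
    history
}

fn main() {
    let history = simulate(1.0, 1e-3);
    let [ir, il, ic, v] = *history.last().unwrap();
    println!("t = 1: iR = {ir:.4e}, iL = {il:.4e}, iC = {ic:.4e}, V = {v:.4e}");
}
```

A fixed first-order step is used only to keep the sketch short; the point is that the algebraic rows (Ohm’s law and Kirchhoff’s current law) are satisfied to rounding error after every step.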

We can solve this system of equations using Diffsol and plot the current through the resistor, \(i_R\), as a function of time.

use diffsol::{CraneliftJitModule, MatrixCommon, OdeBuilder, OdeSolverMethod};
use plotly::{common::Mode, layout::Axis, layout::Layout, Plot, Scatter};
use std::fs;

type M = diffsol::NalgebraMat<f64>;
type CG = CraneliftJitModule;
type LS = diffsol::NalgebraLU<f64>;

fn main() {
    let problem = OdeBuilder::<M>::new()
        .build_from_diffsl::<CG>(
            "
            R { 100.0 } L { 1.0 } C { 0.001 } V0 { 10 } omega { 100.0 }
            Vs { V0 * sin(omega * t) }
            u_i {
                iR = 0,
                iL = 0,
                iC = 0,
                V = 0,
            }
            dudt_i {
                diRdt = 0,
                diLdt = 0,
                diCdt = 0,
                dVdt = 0,
            }
            M_i {
                0,
                diLdt,
                0,
                dVdt,
            }
            F_i {
                V - R * iR,
                (Vs - V) / L,
                iL - iR - iC,
                iC / C,
            }
            out_i {
                iR,
            }
        ",
        )
        .unwrap();
    let mut solver = problem.bdf::<LS>().unwrap();
    let (ys, ts) = solver.solve(1.0).unwrap();

    let ir: Vec<_> = ys.inner().row(0).into_iter().copied().collect();
    let t: Vec<_> = ts.into_iter().collect();

    let ir = Scatter::new(t.clone(), ir).mode(Mode::Lines);
    let mut plot = Plot::new();
    plot.add_trace(ir);
    let layout = Layout::new()
        .x_axis(Axis::new().title("t"))
        .y_axis(Axis::new().title("current"));
    plot.set_layout(layout);
    let plot_html = plot.to_inline_html(Some("electrical-circuit"));
    fs::write("../src/primer/images/electrical-circuit.html", plot_html)
        .expect("Unable to write file");
}

Partial Differential Equations (PDEs)

Diffsol is an ODE solver, but it can also solve PDEs. The idea is to discretise the PDE in space, and then solve the resulting system of ODEs in time. This is called the method of lines.

Discretizing a PDE is a large topic, and there are many ways to do it. Common methods include finite difference, finite volume, finite element, and spectral methods. Finite difference methods are the simplest to understand and implement, so some of the examples in this book will demonstrate this method to give you a flavour of how to solve PDEs with Diffsol. However, in general we recommend that you use another package to discretise your PDE, and then import the resulting ODE system into Diffsol for solving.

Some useful packages

There are many packages in the Python and Julia ecosystems that can help you discretise your PDE. Here are a few, but there are many more out there:

Python

- FEniCS: A finite element package. Uses the Unified Form Language (UFL) to specify PDEs.

- Firedrake: A finite element package that uses the same UFL as FEniCS.

- FiPy: A finite volume package.

- scikit-fdiff: A finite difference package.

Julia

- MethodOfLines.jl: A finite difference package.

- Gridap.jl: A finite element package.

Example: Heat equation

Let’s consider a simple example, the heat equation. The heat equation is a PDE that describes how the temperature of a material changes over time. In one dimension, the heat equation is

\[ \frac{\partial u}{\partial t} = D \frac{\partial^2 u}{\partial x^2} \]

where \(u(x, t)\) is the temperature of the material at position \(x\) and time \(t\), and \(D\) is the thermal diffusivity of the material. To solve this equation, we discretise it in space: we use a finite difference method to approximate the spatial derivative, and then solve the resulting system of ODEs in time using Diffsol.

Finite difference method

The finite difference method is a numerical method for discretising a spatial derivative like \(\frac{\partial^2 u}{\partial x^2}\). It approximates this continuous term by a discrete term, in this case the multiplication of a matrix by a vector. We can use this discretisation method to convert the heat equation into a system of ODEs suitable for Diffsol.

We will not go into the details of the finite difference method here, but merely derive a single finite difference approximation for the term \(\frac{\partial^2 u}{\partial x^2}\), or \(u_{xx}\) in more compact notation.

The central finite difference (FD) approximation of \(u_{xx}\) is:

\[ u_{xx} \approx \frac{u(x + h) - 2u(x) + u(x-h)}{h^2} \]

where \(h\) is the spacing between points along the x-axis.
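A quick numerical check of this formula, as a Rust sketch: apply it to \(u(x) = \sin x\), whose exact second derivative is \(-\sin x\), and watch the error fall by roughly a factor of four each time \(h\) is halved, as expected for a second-order accurate scheme. The helper name `second_derivative` is our own.

```rust
/// Central finite-difference approximation of u''(x) with spacing h.
fn second_derivative(u: impl Fn(f64) -> f64, x: f64, h: f64) -> f64 {
    (u(x + h) - 2.0 * u(x) + u(x - h)) / (h * h)
}

fn main() {
    let x: f64 = 1.0;
    let exact = -x.sin(); // d²/dx² sin(x) = -sin(x)
    for h in [0.1, 0.05, 0.025] {
        let err = (second_derivative(f64::sin, x, h) - exact).abs();
        println!("h = {h:<6} error = {err:.3e}");
    }
}
```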

We will discretise \(u_{xx}\) at \(N\) regularly spaced interior points along \(x\) between 0 and 1, given by \(x_i = i h\) for \(i = 1, \dots, N\), with spacing \(h = 1/(N+1)\):

+----+----+----------+----+> x
0   x_1  x_2   ...  x_N   1

Using this set of points and the discrete approximation gives one equation at each of the \(N\) interior points:

\[ u_{xx}(x_i) \approx \frac{v_{i+1} - 2v_i + v_{i-1}}{h^2}, \quad i = 1, \dots, N \]

where \(v_i \approx u(x_i)\).

We will need additional equations at \(x=0\) and \(x=1\), known as the boundary conditions. For this example we will use \(u(x) = g(x)\) at \(x=0\) and \(x=1\) (also known as a non-homogeneous Dirichlet boundary condition), so that \(v_0 = g(0)\) and \(v_{N+1} = g(1)\), and the equation at \(x_1\) becomes:

\[ \frac{v_2 - 2v_1 + g(0)}{h^2} \]

and the equation at \(x_N\) becomes:

\[ \frac{g(1) - 2v_N + v_{N-1}}{h^2} \]

We can therefore represent the final \(N\) equations in matrix form like so:

\[ \frac{1}{h^2} \begin{bmatrix} -2 & 1 & & & \\ 1 & -2 & 1 & & \\ &\ddots & \ddots & \ddots &\\ & & 1 & -2 & 1 \\ & & & 1 & -2 \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_{N-1}\\ v_{N} \end{bmatrix} + \frac{1}{h^2} \begin{bmatrix} g(0) \\ 0 \\ \vdots \\ 0 \\ g(1) \end{bmatrix} \]

The relevant sparse matrix here is \(A\), given by

\[ A = \begin{bmatrix} -2 & 1 & & & \\ 1 & -2 & 1 & & \\ &\ddots & \ddots & \ddots &\\ & & 1 & -2 & 1 \\ & & & 1 & -2 \end{bmatrix} \]

As you can see, the number of non-zero elements (\(3N - 2\)) grows linearly with \(N\), so a sparse matrix format is much preferred over a dense matrix holding all \(N^2\) elements. The additional vector that encodes the boundary conditions is \(b\), given by

\[ b = \begin{bmatrix} g(0) \\ 0 \\ \vdots \\ 0 \\ g(1) \end{bmatrix} \]
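Because \(A\) has only three non-zero diagonals, the product \(A\mathbf{v} + \mathbf{b}\) can also be computed without storing \(A\) at all. The Rust sketch below is a matrix-free illustration of our own, not how the DiffSL code in this section builds \(A\). It checks the result on \(u(x) = x^2\), for which the central difference formula is exact, so every entry of \((A\mathbf{v} + \mathbf{b})/h^2\) should equal \(u_{xx} = 2\) up to rounding. Here \(g(0) = 0\) and \(g(1) = 1\) are the boundary values of \(x^2\).

```rust
/// Compute (A v + b) / h^2 for A = tridiag(1, -2, 1) and b = [g0, 0, ..., 0, g1]
/// without storing A: the neighbours of the first and last interior points
/// are the Dirichlet boundary values.
fn laplacian(v: &[f64], g0: f64, g1: f64, h: f64) -> Vec<f64> {
    let n = v.len();
    (0..n)
        .map(|i| {
            let left = if i == 0 { g0 } else { v[i - 1] };
            let right = if i == n - 1 { g1 } else { v[i + 1] };
            (left - 2.0 * v[i] + right) / (h * h)
        })
        .collect()
}

fn main() {
    let n = 9;
    let h = 1.0 / (n as f64 + 1.0);
    // sample u(x) = x^2 at the interior points x_i = i h, i = 1..=n
    let v: Vec<f64> = (1..=n).map(|i| (i as f64 * h).powi(2)).collect();
    // u(0) = 0 and u(1) = 1 supply the boundary values
    let uxx = laplacian(&v, 0.0, 1.0, h);
    println!("{:?}", uxx);
}
```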

Method of Lines Approximation

We can use our FD approximation of the spatial derivative to convert the heat equation into a system of ODEs. Starting from our original definition of the heat equation:

\[ \frac{\partial u}{\partial t} = D \frac{\partial^2 u}{\partial x^2} \]

and using our finite difference approximation and definition of the sparse matrix \(A\) and vector \(b\), this becomes:

\[ \frac{du}{dt} = \frac{D}{h^2} (A u + b) \]

where \(u\) is now the vector of approximate temperatures at the \(N\) interior points. This is a system of ODEs that we can solve using Diffsol.
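To show what solving this ODE system means in the simplest possible terms, the Rust sketch below advances it with the explicit (forward) Euler method. This is a deliberately simple stand-in, not what Diffsol does (the Diffsol code in this section uses the implicit BDF method). It also hints at why an implicit method is preferred here: forward Euler is only stable for \(\Delta t \le h^2 / (2D)\), so the step size must shrink with the square of the grid spacing. The grid (21 interior points, \(h = 1/22\)), \(D = 0.1\), zero boundary values and the block initial condition are our own illustrative choices.

```rust
/// One forward Euler step of du/dt = D / h^2 (A u + b) with Dirichlet values g0, g1.
fn euler_step(u: &[f64], d: f64, h: f64, dt: f64, g0: f64, g1: f64) -> Vec<f64> {
    let n = u.len();
    (0..n)
        .map(|i| {
            let left = if i == 0 { g0 } else { u[i - 1] };
            let right = if i + 1 == n { g1 } else { u[i + 1] };
            u[i] + dt * d * (left - 2.0 * u[i] + right) / (h * h)
        })
        .collect()
}

fn main() {
    let n = 21;
    let (d, g): (f64, f64) = (0.1, 0.0);
    let h = 1.0 / (n as f64 + 1.0);
    // a block of ones in the middle of the domain, g elsewhere
    let mut u: Vec<f64> = (0..n)
        .map(|i| if (5..15).contains(&i) { g + 1.0 } else { g })
        .collect();
    // forward Euler is only stable for dt <= h^2 / (2 D); use half that limit
    let dt = 0.5 * h * h / (2.0 * d);
    let steps = (1.0 / dt).ceil() as usize;
    for _ in 0..steps {
        u = euler_step(&u, d, h, dt, g, g);
    }
    let max = u.iter().cloned().fold(f64::MIN, f64::max);
    println!("{steps} steps of dt = {dt:.2e}, max u at t = 1: {max:.4}");
}
```

Over a thousand explicit steps are needed to reach \(t = 1\) on this coarse grid, and halving \(h\) would quadruple that number, which is exactly the stiffness an implicit solver avoids.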

Diffsol Implementation

use diffsol::{
    CraneliftJitModule, FaerSparseLU, FaerSparseMat, MatrixCommon, OdeBuilder, OdeSolverMethod,
};
use plotly::{
    layout::{Axis, Layout},
    Plot, Surface,
};
use std::fs;

type M = FaerSparseMat<f64>;
type LS = FaerSparseLU<f64>;
type CG = CraneliftJitModule;

fn main() {
    // 21 interior grid points on (0, 1), so the grid spacing is h = 1 / (21 + 1)
    let problem = OdeBuilder::<M>::new()
        .build_from_diffsl::<CG>(
            "
            D { 0.1 }
            h { 1.0 / 22.0 }
            g { 0.0 }
            m { 1.0 }
            A_ij {
                (0..20, 1..21): 1.0,
                (0..21, 0..21): -2.0,
                (1..21, 0..20): 1.0,
            }
            b_i {
                (0): g,
                (1:20): 0.0,
                (20): g,
            }
            u_i {
                (0:5): g,
                (5:15): g + m,
                (15:21): g,
            }
            heat_i { A_ij * u_j }
            F_i {
                D * (heat_i + b_i) / (h * h)
            }",
        )
        .unwrap();

    let times = (0..100).map(|i| i as f64 / 100.0).collect::<Vec<f64>>();
    let mut solver = problem.bdf::<LS>().unwrap();
    let sol = solver.solve_dense(&times).unwrap();

    // x-coordinates of the 21 interior grid points, x_i = i h
    let x = (1..=21).map(|i| i as f64 / 22.0).collect::<Vec<f64>>();
    let y = times;
    let z = sol
        .inner()
        .col_iter()
        .map(|v| v.iter().copied().collect::<Vec<f64>>())
        .collect::<Vec<Vec<f64>>>();
    let trace = Surface::new(z).x(x).y(y);
    let mut plot = Plot::new();
    plot.add_trace(trace);
    let layout = Layout::new()
        .x_axis(Axis::new().title("x"))
        .y_axis(Axis::new().title("t"))
        .z_axis(Axis::new().title("u"));
    plot.set_layout(layout);
    let plot_html = plot.to_inline_html(Some("heat-equation"));
    fs::write("book/src/primer/images/heat-equation.html", plot_html)
        .expect("Unable to write file");
}

Physics-based Battery Simulation

Traditional battery models are based on equivalent circuit models, similar to the circuit modelled in the Electrical Circuits section. These models are simple and computationally efficient, but they lack the ability to capture all of the complex electrochemical processes that occur in a battery. Physics-based models, on the other hand, are based on the electrochemical processes that occur in the battery, and can provide a more detailed description of the battery’s behaviour. They are parameterised by physical properties of the battery, such as the diffusion coefficients of lithium ions in the electrodes, the reaction rate constants, and the surface area of the electrodes, and can be used to predict the battery’s performance under different operating conditions, once these parameters are known.

The Single Particle Model (SPM) is a physics-based model of a lithium-ion battery. It describes the diffusion of lithium ions in the positive and negative electrodes of the battery over a 1D radial domain, assuming that the properties of the electrodes are uniform across the thickness of the electrode. Here we will describe the equations that govern the SPM, and show how to solve them at different current rates to calculate the terminal voltage of the battery.

The Single Particle Model state equations

The SPM only needs to solve for the concentration of lithium ions in the negative and positive electrode particles, \(c_n\) and \(c_p\). The diffusion of lithium ions in each electrode particle is given by:

\[ \frac{\partial c_i}{\partial t} = \nabla \cdot (D_i \nabla c_i) \]

subject to the following boundary and initial conditions:

\[ \left.\frac{\partial c_i}{\partial r}\right\vert_{r=0} = 0, \quad \left.\frac{\partial c_i}{\partial r}\right\vert_{r=R_i} = -j_i, \quad \left.c_i\right\vert_{t=0} = c^0_i \]

where \(c_i\) is the concentration of lithium ions in the negative (\(i=n\)) or positive (\(i=p\)) electrode particle, \(r\) is the radial coordinate, \(R_i\) is the particle radius, \(D_i\) is the diffusion coefficient, \(j_i\) is the interfacial current density, and \(c^0_i\) is the initial concentration.

The fluxes of lithium ions in the negative and positive electrodes, \(j_n\) and \(j_p\), depend on the applied current \(I\):

\[ j_n = \frac{I}{a_n \delta_n F \mathcal{A}}, \qquad j_p = \frac{-I}{a_p \delta_p F \mathcal{A}}, \]

where \(a_i = 3 \epsilon_i / R_i\) is the specific surface area of the electrode, \(\epsilon_i\) is the volume fraction of active material, \(\delta_i\) is the thickness of the electrode, \(F\) is the Faraday constant, and \(\mathcal{A}\) is the electrode surface area.
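The flux definitions translate directly into code. The Rust sketch below computes \(a_i\), \(j_n\) and \(j_p\) from the electrode geometry. The `Electrode` struct and the numerical values in `main` are hypothetical placeholders chosen for illustration, not a real cell parameterisation. Note that \(j_n\) and \(j_p\) always have opposite signs for a given current.

```rust
/// Faraday constant [C/mol]
const FARADAY: f64 = 96485.33212;

/// Electrode properties needed for the interfacial flux.
struct Electrode {
    eps: f64,       // active material volume fraction ε_i
    radius: f64,    // particle radius R_i [m]
    thickness: f64, // electrode thickness δ_i [m]
}

impl Electrode {
    /// Specific surface area a_i = 3 ε_i / R_i
    fn specific_area(&self) -> f64 {
        3.0 * self.eps / self.radius
    }
}

/// Returns (j_n, j_p) for applied current `current` [A] and electrode area `area` [m^2].
fn fluxes(current: f64, neg: &Electrode, pos: &Electrode, area: f64) -> (f64, f64) {
    let j_n = current / (neg.specific_area() * neg.thickness * FARADAY * area);
    let j_p = -current / (pos.specific_area() * pos.thickness * FARADAY * area);
    (j_n, j_p)
}

fn main() {
    // hypothetical parameter values, for illustration only
    let neg = Electrode { eps: 0.75, radius: 5e-6, thickness: 85e-6 };
    let pos = Electrode { eps: 0.67, radius: 5e-6, thickness: 75e-6 };
    let (j_n, j_p) = fluxes(1.0, &neg, &pos, 0.1);
    println!("j_n = {j_n:.3e}, j_p = {j_p:.3e}");
}
```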

Output variables for the Single Particle Model

Now that we have defined the equations to solve, we turn to the output variables that we need to calculate from the state variables \(c_n\) and \(c_p\). The terminal voltage of the battery is given by:

\[ V = U_p(x_p^s) - U_n(x_n^s) + \eta_p - \eta_n \]

where \(U_i\) is the open circuit potential (OCP) of the electrode, \(x_i^s = c_i(r=R_i) / c_i^{max}\) is the surface stoichiometry, and \(\eta_i\) is the overpotential.

Assuming Butler-Volmer kinetics and \(\alpha_i = 0.5\), the overpotential is given by:

\[ \eta_i = \frac{2RT}{F} \sinh^{-1} \left( \frac{j_i F}{2i_{0,i}} \right) \]

where the exchange current density \(i_{0,i}\) is given by:

\[ i_{0,i} = k_i F \sqrt{c_e} \sqrt{c_i(r=R_i)} \sqrt{c_i^{max} - c_i(r=R_i)} \]

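where \(c_e\) is the concentration of lithium ions in the electrolyte, \(k_i\) is the reaction rate constant, \(R\) is the universal gas constant (not to be confused with the particle radius \(R_i\)), and \(T\) is the temperature.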
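The output equations can be sketched in the same way. The Rust functions below implement \(i_{0,i}\), \(\eta_i\) and \(V\) as written above. The open-circuit potentials are passed in as closures, since their functional form depends on the electrode chemistry. The OCP closures and all numerical values in `main` are hypothetical, for illustration only; the physical constants are the CODATA values of the Faraday and gas constants.

```rust
const FARADAY: f64 = 96485.33212; // C/mol
const GAS_CONSTANT: f64 = 8.314462618; // J/(mol K)

/// Exchange current density i_0 = k F sqrt(c_e) sqrt(c_s) sqrt(c_max - c_s)
fn exchange_current(k: f64, c_e: f64, c_s: f64, c_max: f64) -> f64 {
    k * FARADAY * c_e.sqrt() * c_s.sqrt() * (c_max - c_s).sqrt()
}

/// Butler-Volmer overpotential with α = 0.5: η = (2RT/F) asinh(j F / (2 i_0))
fn overpotential(j: f64, i0: f64, temperature: f64) -> f64 {
    2.0 * GAS_CONSTANT * temperature / FARADAY * (j * FARADAY / (2.0 * i0)).asinh()
}

/// Terminal voltage V = U_p(x_p) - U_n(x_n) + η_p - η_n
fn terminal_voltage(
    u_p: impl Fn(f64) -> f64,
    u_n: impl Fn(f64) -> f64,
    x_p: f64,
    x_n: f64,
    eta_p: f64,
    eta_n: f64,
) -> f64 {
    u_p(x_p) - u_n(x_n) + eta_p - eta_n
}

fn main() {
    // hypothetical values, for illustration only
    let temperature = 298.15; // K
    let i0_n = exchange_current(2e-11, 1000.0, 15000.0, 30000.0);
    let i0_p = exchange_current(6e-11, 1000.0, 25000.0, 50000.0);
    let eta_n = overpotential(1e-5, i0_n, temperature);
    let eta_p = overpotential(-1e-5, i0_p, temperature);
    // hypothetical linear open-circuit potentials
    let u_p = |x: f64| 4.2 - 0.8 * x;
    let u_n = |x: f64| 0.2 - 0.1 * x;
    let v = terminal_voltage(u_p, u_n, 0.5, 0.5, eta_p, eta_n);
    println!("eta_n = {eta_n:.4e} V, eta_p = {eta_p:.4e} V, V = {v:.4} V");
}
```

For small \(j_i\), \(\sinh^{-1}(x) \approx x\), so the overpotential reduces to the linear relation \(\eta_i \approx RT j_i / i_{0,i}\).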

Stopping conditions

We wish to terminate the simulation if the terminal voltage exceeds an upper threshold \(V_{\text{max}}\) or falls below a lower threshold \(V_{\text{min}}\). Diffsol uses a root-finding algorithm to detect when the terminal voltage crosses these thresholds, using the following stopping conditions:

\[ V_{\text{max}} - V = 0, \qquad V - V_{\text{min}} = 0, \]
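Diffsol performs this root finding internally. As a rough illustration of the idea only, not of Diffsol’s actual algorithm, the Rust sketch below steps through time, watches for either stopping function changing sign from positive to non-positive between two steps, and then locates the crossing by bisection. The closure `voltage` is a hypothetical stand-in for the solver’s interpolated output, and the function names are our own.

```rust
/// Stopping functions: both are positive while V_min < V < V_max.
fn stop_conditions(v: f64, v_min: f64, v_max: f64) -> [f64; 2] {
    [v_max - v, v - v_min]
}

/// Locate a sign change of `g` on [t0, t1] by bisection.
fn bisect(g: impl Fn(f64) -> f64, mut t0: f64, mut t1: f64, tol: f64) -> f64 {
    let mut g0 = g(t0);
    while t1 - t0 > tol {
        let tm = 0.5 * (t0 + t1);
        let gm = g(tm);
        if g0.signum() == gm.signum() {
            t0 = tm;
            g0 = gm;
        } else {
            t1 = tm;
        }
    }
    0.5 * (t0 + t1)
}

/// Step through [0, t_end] and return the time of the first root of either
/// stopping function, or None if the final time is reached first.
fn find_stop_time(
    voltage: impl Fn(f64) -> f64,
    v_min: f64,
    v_max: f64,
    t_end: f64,
    dt: f64,
) -> Option<f64> {
    let mut t_prev: f64 = 0.0;
    let mut g_prev = stop_conditions(voltage(t_prev), v_min, v_max);
    while t_prev < t_end {
        let t = (t_prev + dt).min(t_end);
        let g = stop_conditions(voltage(t), v_min, v_max);
        for k in 0..2 {
            if g_prev[k] > 0.0 && g[k] <= 0.0 {
                let gk = |s: f64| stop_conditions(voltage(s), v_min, v_max)[k];
                return Some(bisect(gk, t_prev, t, 1e-10));
            }
        }
        t_prev = t;
        g_prev = g;
    }
    None
}

fn main() {
    // hypothetical, linearly falling discharge voltage
    let stop = find_stop_time(|t| 4.2 - 0.0005 * t, 3.0, 4.3, 3600.0, 3.0);
    println!("stop time: {stop:?}");
}
```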

Solving the Single Particle Model using Diffsol

The equations above describe the Single Particle Model of a lithium-ion battery, but they are relatively complex and difficult to discretise compared with the simple heat equation PDE that we saw in the Heat Equation section.

Rather than derive and write down the discretised equations ourselves, we will instead rely on the PyBaMM library to generate them for us. PyBaMM is a Python library that can generate a wide variety of physics-based battery models, using different parameterisations, physics and operating conditions. Combined with a tool that takes a PyBaMM model and writes it out in the DiffSL language, we can generate a DiffSL file that can be used to solve the equations of the SPM described above. We can then use the Diffsol crate to solve the model and calculate the terminal voltage of the battery over a range of current rates.

The code below reads in the DiffSL file, compiles it, and then solves the equations for different current rates. We wish to stop the simulation when either the final time is reached, or when one of the stopping conditions is met. We will output the terminal voltage of the battery at regular intervals during the simulation, because the terminal voltage can change more rapidly than the state variables \(c_n\) and \(c_p\), particularly during the “knee” of the discharge curve.

The discretised equations result in sparse matrices, so we use the sparse matrix and linear solver modules provided by the faer crate to solve the equations efficiently.

use diffsol::{
    CraneliftJitModule, FaerSparseLU, FaerSparseMat, FaerVec, NonLinearOp, OdeBuilder,
    OdeEquations, OdeSolverMethod, OdeSolverStopReason, Vector,
};
use plotly::{common::Mode, layout::Axis, layout::Layout, Plot, Scatter};
use std::fs;

type M = FaerSparseMat<f64>;
type V = FaerVec<f64>;
type LS = FaerSparseLU<f64>;
type CG = CraneliftJitModule;

fn main() {
    let file = std::fs::read_to_string("../src/primer/src/spm.ds").unwrap();
    let mut problem = OdeBuilder::<M>::new()
        .p([1.0])
        .build_from_diffsl::<CG>(&file)
        .unwrap();
    let currents = vec![0.6, 0.8, 1.0, 1.2, 1.4];
    let final_time = 3600.0;
    let delta_t = 3.0;

    let mut plot = Plot::new();
    for current in currents {
        problem
            .eqn
            .set_params(&V::from_vec(vec![current], *problem.context()));
        let mut solver = problem.bdf::<LS>().unwrap();
        let mut v = Vec::new();
        let mut t = Vec::new();

        // save the initial output
        let mut out = problem
            .eqn
            .out()
            .unwrap()
            .call(solver.state().y, solver.state().t);
        v.push(out[0]);
        t.push(0.0);

        // solve until the final time is reached
        // or we reach the stop condition
        solver.set_stop_time(final_time).unwrap();
        let mut next_output_time = delta_t;
        let mut finished = false;
        while !finished {
            let curr_t = match solver.step() {
                Ok(OdeSolverStopReason::InternalTimestep) => solver.state().t,
                Ok(OdeSolverStopReason::RootFound(t)) => {
                    finished = true;
                    t
                }
                Ok(OdeSolverStopReason::TstopReached) => {
                    finished = true;
                    final_time
                }
                Err(_) => panic!("unexpected solver error"),
            };
            while curr_t > next_output_time {
                let y = solver.interpolate(next_output_time).unwrap();
                problem
                    .eqn
                    .out()
                    .unwrap()
                    .call_inplace(&y, next_output_time, &mut out);
                v.push(out[0]);
                t.push(next_output_time);
                next_output_time += delta_t;
            }
        }

        let voltage = Scatter::new(t, v)
            .mode(Mode::Lines)
            .name(format!("current = {current} A"));
        plot.add_trace(voltage);
    }

    let layout = Layout::new()
        .x_axis(Axis::new().title("t [sec]"))
        .y_axis(Axis::new().title("voltage [V]"));
    plot.set_layout(layout);
    let plot_html = plot.to_inline_html(Some("battery-simulation"));
    fs::write("../src/primer/images/battery-simulation.html", plot_html)
        .expect("Unable to write file");
}

Forward Sensitivity Analysis

Recall our general ODE system (we’ll define it without the mass matrix for now):

\[ \begin{align*} \frac{dy}{dt} &= f(t, y, p) \\ y(t_0) &= y_0 \end{align*} \]

Solving this system gives us the solution \(y(t)\) for a given set of parameters \(p\). However, we often want to know how the solution changes with respect to the parameters (e.g. for model fitting). This is where forward sensitivity analysis comes in. If we take the derivative of the ODE system with respect to the parameters, we get the sensitivity equations:

\[ \begin{align*} \frac{d}{dt} \frac{dy}{dp} &= \frac{\partial f}{\partial y} \frac{dy}{dp} + \frac{\partial f}{\partial p} \\ \frac{dy}{dp}(t_0) &= \frac{dy_0}{dp} \end{align*} \]

Here, \(\frac{dy}{dp}\) is the sensitivity of the solution with respect to the parameters. The sensitivity equations are solved alongside the ODE system to give us both the solution and its sensitivity with respect to the parameters. Note that this is a similar concept to forward-mode automatic differentiation, but whereas automatic differentiation calculates the derivative of the code itself (e.g. the discretised ODE system), forward sensitivity analysis calculates the derivative of the continuous equations before they are discretised. This means that the error control for forward sensitivity analysis is decoupled from the forward solve, and the tolerances for both can be set independently. However, both methods scale in the same way as the number of parameters increases: each additional parameter adds another set of sensitivity equations (one per state variable) to be solved, so the method becomes inefficient for large numbers of parameters (more than about 100). In this case, adjoint sensitivity analysis is often preferred.
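For a single state and a single parameter the sensitivity equations are easy to write down by hand. The Rust sketch below uses the toy model \(dy/dt = -p\,y\), \(y(0) = y_0\), whose sensitivity \(s = dy/dp\) obeys \(ds/dt = -p\,s - y\) with \(s(0) = 0\) (since \(y_0\) does not depend on \(p\)). It integrates the augmented system \([y, s]\) with a fixed-step fourth-order Runge-Kutta method, chosen purely for brevity, and compares the result with the exact sensitivity \(dy/dp = -t\,y_0 e^{-pt}\). Diffsol itself uses its own adaptive solvers, with separate error control for the sensitivities.

```rust
/// Right-hand side of the augmented system [y, s] for dy/dt = f(y, p) = -p y:
///   dy/dt = -p y
///   ds/dt = (∂f/∂y) s + ∂f/∂p = -p s - y
fn augmented_rhs(state: [f64; 2], p: f64) -> [f64; 2] {
    let [y, s] = state;
    [-p * y, -p * s - y]
}

/// Classic fourth-order Runge-Kutta on the augmented system.
fn solve_with_sensitivity(p: f64, y0: f64, t_end: f64, n_steps: usize) -> [f64; 2] {
    let h = t_end / n_steps as f64;
    let mut x = [y0, 0.0]; // s(0) = dy0/dp = 0 because y0 does not depend on p
    let add = |a: [f64; 2], b: [f64; 2], c: f64| [a[0] + c * b[0], a[1] + c * b[1]];
    for _ in 0..n_steps {
        let k1 = augmented_rhs(x, p);
        let k2 = augmented_rhs(add(x, k1, 0.5 * h), p);
        let k3 = augmented_rhs(add(x, k2, 0.5 * h), p);
        let k4 = augmented_rhs(add(x, k3, h), p);
        for i in 0..2 {
            x[i] += h / 6.0 * (k1[i] + 2.0 * k2[i] + 2.0 * k3[i] + k4[i]);
        }
    }
    x
}

fn main() {
    let (p, t): (f64, f64) = (0.5, 2.0);
    let [y, s] = solve_with_sensitivity(p, 1.0, t, 200);
    // exact solution for y0 = 1: y = exp(-p t), dy/dp = -t exp(-p t)
    println!("y = {y:.8}, dy/dp = {s:.8}, exact dy/dp = {:.8}", -t * (-p * t).exp());
}
```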

To use forward sensitivity analysis in Diffsol, more equations need to be specified that calculate the gradients with respect to the parameters. If you are using the OdeBuilder struct and Rust closures, you need to supply additional closures that calculate the gradient of the right-hand side, and the gradient of the initial state vector, with respect to the parameters. You can see an example of this in the Forward Sensitivity API section. If you are using the DiffSL language, these gradients are calculated automatically and you don’t need to worry about them. An example of using forward sensitivity analysis in DiffSL is given in the Fitting a Predator-Prey Model to Data section next.

Fitting a Predator-Prey Model to data

In this example we will again use the Lotka-Volterra equations, which are described in more detail in the Population Dynamics - Predator-Prey Model example.

The equations are

\[ \begin{align*} \frac{dx}{dt} &= a x - b x y \\ \frac{dy}{dt} &= c x y - d y \end{align*} \]

We also have initial conditions for the populations at time \(t = 0\).

\[ \begin{align*} x(0) &= x_0 \\ y(0) &= y_0 \end{align*} \]

This model has six parameters, \(a, b, c, d, x_0, y_0\). For the purposes of this example, we’ll fit two of these parameters, \(b\) and \(d\), to some synthetic data. We’ll use the model itself to generate the synthetic data, so we’ll know the true values of the parameters to verify the fitting process.

We’ll use the argmin crate to perform the optimisation. This is a popular Rust crate that contains a number of optimisation algorithms. It does have some limitations, such as the lack of support for constraints, so it may not be suitable for many real ODE fitting problems, as the solver can easily fail to converge if the parameter vector moves into a difficult region (e.g. the Lotka-Volterra model only makes sense for positive values of the parameters). However, it will be sufficient for this example to demonstrate the sensitivity analysis capabilities of Diffsol.

First of all we will need to implement some of the argmin traits to specify the optimisation problem. We’ll create a struct Problem and implement the CostFunction and Gradient traits for it. The Problem struct will hold our synthetic data (held by the ys_data and ts_data fields) and the OdeSolverProblem that we’ll use to solve the ODEs. We’ll also create some type aliases for the nalgebra and diffsol types we’ll be using.

Note that the problem field of the Problem struct is wrapped in a RefCell so that we can mutate it in the cost and gradient methods. Setting the parameters of the ODE solver problem is a mutable operation (i.e. you are changing the equations), so we need to use RefCell and interior mutability to do this.

use argmin::{
    core::{observers::ObserverMode, CostFunction, Executor, Gradient},
    solver::{linesearch::MoreThuenteLineSearch, quasinewton::LBFGS},
};
use argmin_observer_slog::SlogLogger;
use diffsol::{
    DiffSl, MatrixCommon, OdeBuilder, OdeEquations, OdeSolverMethod, OdeSolverProblem, Op,
    SensitivitiesOdeSolverMethod, Vector,
};
use std::cell::RefCell;

type M = diffsol::NalgebraMat<f64>;
type V = diffsol::NalgebraVec<f64>;
type T = f64;
type LS = diffsol::NalgebraLU<f64>;
type CG = diffsol::LlvmModule;
type Eqn = DiffSl<M, CG>;

struct Problem {
    ys_data: M,
    ts_data: Vec<T>,
    problem: RefCell<OdeSolverProblem<Eqn>>,
}

The argmin CostFunction trait requires an implementation of the cost method, which will calculate the sum-of-squares difference between the synthetic data and the model output. Since the argmin crate does not support constraints, we’ll return a large value if the ODE solver fails to converge.

impl CostFunction for Problem {
    type Output = T;
    type Param = Vec<T>;

    fn cost(&self, param: &Self::Param) -> Result<Self::Output, argmin_math::Error> {
        let mut problem = self.problem.borrow_mut();
        let ctx = *problem.eqn().context();
        problem
            .eqn_mut()
            .set_params(&V::from_vec(param.clone(), ctx));
        let mut solver = problem.bdf::<LS>().unwrap();
        let ys = match solver.solve_dense(&self.ts_data) {
            Ok(ys) => ys,
            Err(_) => return Ok(f64::MAX / 1000.),
        };
        let loss = ys
            .inner()
            .column_iter()
            .zip(self.ys_data.inner().column_iter())
            .map(|(a, b)| (a - b).norm_squared())
            .sum::<f64>();
        Ok(loss)
    }
}

The argmin Gradient trait requires an implementation of the gradient method, which will calculate the gradient of the cost function with respect to the parameters. Our sum-of-squares cost function can be written as

\[ \text{loss} = \sum_i (y_i(p) - \hat{y}_i)^2 \]

where \(y_i(p)\) is the model output as a function of the parameters \(p\), and \(\hat{y}_i\) is the observed data at time index \(i\). Therefore, the gradient of this cost function with respect to the parameters is

\[ \frac{\partial \text{loss}}{\partial p} = 2 \sum_i (y_i(p) - \hat{y}_i) \cdot \frac{\partial y_i}{\partial p} \]

where \(\frac{\partial y_i}{\partial p}\) is the sensitivity of the model output with respect to the parameters. We can calculate this sensitivity using the solve_dense_sensitivities method of the ODE solver. The gradient of the cost function is then the sum of the dot product of the residuals and the sensitivities for each time point. Again, if the ODE solver fails to converge, we’ll return a large value for the gradient.
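The same reduction can be checked on a toy problem. In the Rust sketch below, the closed-form model \(y(t; p) = e^{-pt}\), with sensitivity \(\partial y / \partial p = -t e^{-pt}\), stands in for the ODE solve and `solve_dense_sensitivities`. The gradient assembled from the residuals and sensitivities is then compared against a central finite-difference approximation of the loss. The function names and data are our own illustrative choices.

```rust
/// Model output and its sensitivity for the toy model y(t; p) = exp(-p t),
/// standing in for the ODE solution and a sensitivity solve.
fn model(p: f64, t: f64) -> (f64, f64) {
    let y = (-p * t).exp();
    (y, -t * y) // (y, dy/dp)
}

/// Sum-of-squares loss and its gradient 2 Σ (y_i - d_i) dy_i/dp.
fn loss_and_gradient(p: f64, ts: &[f64], data: &[f64]) -> (f64, f64) {
    let mut loss = 0.0;
    let mut grad = 0.0;
    for (&t, &d) in ts.iter().zip(data) {
        let (y, dydp) = model(p, t);
        loss += (y - d).powi(2);
        grad += 2.0 * (y - d) * dydp;
    }
    (loss, grad)
}

fn main() {
    let ts: Vec<f64> = (0..20).map(|i| i as f64 * 0.25).collect();
    // synthetic data generated with the "true" parameter p = 0.7
    let data: Vec<f64> = ts.iter().map(|&t| model(0.7, t).0).collect();
    let p = 0.5;
    let (_, grad) = loss_and_gradient(p, &ts, &data);
    // central finite-difference check of the gradient
    let eps = 1e-6;
    let fd = (loss_and_gradient(p + eps, &ts, &data).0
        - loss_and_gradient(p - eps, &ts, &data).0)
        / (2.0 * eps);
    println!("sensitivity gradient = {grad:.8}, finite difference = {fd:.8}");
}
```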

impl Gradient for Problem {
    type Gradient = Vec<T>;
    type Param = Vec<T>;

    fn gradient(&self, param: &Self::Param) -> Result<Self::Gradient, argmin_math::Error> {
        let mut problem = self.problem.borrow_mut();
        let ctx = *problem.eqn().context();
        problem
            .eqn_mut()
            .set_params(&V::from_vec(param.clone(), ctx));
        let mut solver = problem.bdf_sens::<LS>().unwrap();
        let (ys, sens) = match solver.solve_dense_sensitivities(&self.ts_data) {
            Ok((ys, sens)) => (ys, sens),
            Err(_) => return Ok(vec![f64::MAX / 1000.; param.len()]),
        };
        let dlossdp = sens
            .into_iter()
            .map(|s| {
                s.inner()
                    .column_iter()
                    .zip(
                        ys.inner()
                            .column_iter()
                            .zip(self.ys_data.inner().column_iter()),
                    )
                    .map(|(si, (yi, di))| 2.0 * (yi - di).dot(&si))
                    .sum::<f64>()
            })
            .collect::<Vec<f64>>();
        Ok(dlossdp)
    }
}

With these implementations out of the way, we can now perform the fitting. We’ll generate some synthetic data using the Lotka-Volterra equations with some true parameters, and then fit the model to this data. We’ll use the LBFGS solver from the argmin crate, which implements L-BFGS, a limited-memory quasi-Newton method based on the Broyden-Fletcher-Goldfarb-Shanno (BFGS) update formula. We’ll also use the SlogLogger observer to log the progress of the optimisation.

We’ll initialise the optimizer a short distance away from the true parameter values, and then check the final optimised parameter values against the true values.

pub fn main() {
    let code = "
        in_i { b = 4.0/3.0, d = 1.0 }
        a { 2.0/3.0 } c { 1.0 } x0 { 1.0 } y0 { 1.0 }
        u_i {
            y1 = x0,
            y2 = y0,
        }
        F_i {
            a * y1 - b * y1 * y2,
            c * y1 * y2 - d * y2,
        }
    ";
    let (b_true, d_true) = (4.0 / 3.0, 1.0);
    let t_data = (0..101)
        .map(|i| f64::from(i) * 40. / 100.)
        .collect::<Vec<f64>>();
    let problem = OdeBuilder::<M>::new()
        .p([b_true, d_true])
        .sens_atol([1e-6])
        .sens_rtol(1e-6)
        .build_from_diffsl(code)
        .unwrap();
    let ys_data = {
        let mut solver = problem.bdf::<LS>().unwrap();
        solver.solve_dense(&t_data).unwrap()
    };
    let cost = Problem {
        ys_data,
        ts_data: t_data,
        problem: RefCell::new(problem),
    };

    let init_param = vec![b_true - 0.1, d_true - 0.1];
    let linesearch = MoreThuenteLineSearch::new().with_c(1e-4, 0.9).unwrap();
    let solver = LBFGS::new(linesearch, 7);
    let res = Executor::new(cost, solver)
        .configure(|state| state.param(init_param))
        .add_observer(SlogLogger::term(), ObserverMode::Always)
        .run()
        .unwrap();

    // print result
    println!("{}", res);
    // Best parameter vector
    let best = res.state().best_param.as_ref().unwrap();
    println!("Best parameter vector: {:?}", best);
    println!("True parameter vector: {:?}", vec![b_true, d_true]);
}

Feb 03 13:16:44.604 INFO L-BFGS

Feb 03 13:16:44.842 INFO iter: 0, cost: 21.574177406963013, best_cost: 21.574177406963013, cost_count: 6, gradient_count: 7, gamma: 1, time: 0.238573908

Feb 03 13:16:44.920 INFO iter: 1, cost: 0.6811721224055488, best_cost: 0.6811721224055488, cost_count: 8, gradient_count: 10, time: 0.077082344, gamma: 0.00036901099013336356

Feb 03 13:16:44.969 INFO iter: 2, cost: 0.6478536174002669, best_cost: 0.6478536174002669, cost_count: 9, gradient_count: 12, time: 0.049218286, gamma: 0.00017983731521908368

Feb 03 13:16:45.018 INFO iter: 3, cost: 0.5515637814971768, best_cost: 0.5515637814971768, cost_count: 10, gradient_count: 14, time: 0.049264513, gamma: 0.00013404417466199433

Feb 03 13:16:45.069 INFO iter: 4, cost: 0.2889819270908579, best_cost: 0.2889819270908579, cost_count: 11, gradient_count: 16, time: 0.050718659, gamma: 0.00019004425867568796

Feb 03 13:16:45.120 INFO iter: 5, cost: 0.06441855702549167, best_cost: 0.06441855702549167, cost_count: 12, gradient_count: 18, gamma: 0.0005522578375180803, time: 0.05102388

Feb 03 13:16:45.172 INFO iter: 6, cost: 0.001969603423448309, best_cost: 0.001969603423448309, cost_count: 13, gradient_count: 20, time: 0.051874014, gamma: 0.002084311472606979

Feb 03 13:16:45.224 INFO iter: 7, cost: 0.00018682781933202676, best_cost: 0.00018682781933202676, cost_count: 14, gradient_count: 22, gamma: 0.00020342834067043386, time: 0.051705468
Feb 03 13:16:45.276 INFO iter: 8, cost: 0.0000004145187755175781, best_cost: 0.0000004145187755175781, cost_count: 15, gradient_count: 24, time: 0.052326582, gamma: 0.00013653160933392906
Feb 03 13:16:45.328 INFO iter: 9, cost: 0.00000019683422285908518, best_cost: 0.00000019683422285908518, cost_count: 16, gradient_count: 26, time: 0.05250708, gamma: 0.0002897867622209216
Feb 03 13:16:45.381 INFO iter: 10, cost: 0.00000018573267264008743, best_cost: 0.00000018573267264008743, cost_count: 17, gradient_count: 28, time: 0.052503364, gamma: 0.0006940640307853263
Feb 03 13:16:47.047 INFO iter: 11, cost: 0.0000001857326722089365, best_cost: 0.0000001857326722089365, cost_count: 74, gradient_count: 86, gamma: 0.00012163138683631344, time: 1.665703414
Feb 03 13:16:47.531 INFO iter: 12, cost: 0.00000018573267291060315, best_cost: 0.0000001857326722089365, cost_count: 90, gradient_count: 103, gamma: 0.000001225820261527594, time: 0.484634742
Feb 03 13:16:49.078 INFO iter: 13, cost: 0.000000185732672314337, best_cost: 0.0000001857326722089365, cost_count: 143, gradient_count: 157, time: 1.5466635069999999, gamma: 0.00000005014738728081247
Feb 03 13:16:49.562 INFO iter: 14, cost: 0.00000018573267355654775, best_cost: 0.0000001857326722089365, cost_count: 159, gradient_count: 174, gamma: 0.00000386732692846693, time: 0.483722743
Feb 03 13:16:51.051 INFO iter: 15, cost: 0.0000001857326728225398, best_cost: 0.0000001857326722089365, cost_count: 210, gradient_count: 226, time: 1.489475966, gamma: -0.00000029042697044185633
Feb 03 13:16:51.880 INFO iter: 16, cost: 0.00000018573267387423262, best_cost: 0.0000001857326722089365, cost_count: 238, gradient_count: 255, time: 0.828862625, gamma: 0.0000014262616055754604
Feb 03 13:16:53.202 INFO iter: 17, cost: 0.00000018573267376814762, best_cost: 0.0000001857326722089365, cost_count: 283, gradient_count: 301, gamma: 0.00000006448722374243354, time: 1.32160584

OptimizationResult:
Solver: L-BFGS
param (best): [1.3333663799297248, 1.0000008551827637]
cost (best): 0.0000001857326722089365
iters (best): 11
iters (total): 18
termination: Solver converged
time: 8.62392681s

Best parameter vector: [1.3333663799297248, 1.0000008551827637]
True parameter vector: [1.3333333333333333, 1.0]

So, we’ve successfully fitted the Lotka-Volterra model to some synthetic data and recovered the original true parameters. This is a simple example and could easily be improved. For example, the cost_count and gradient_count fields in the output show that argmin calls both the cost and gradient functions, often with exactly the same parameter vector. Ideally we’d cache the results of the solve_dense_sensitivities method and reuse them in both the cost and gradient functions.
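One way to do this is to memoise the most recent evaluation, keyed on the parameter vector. The sketch below is our own and makes several assumptions. The CachedEval type and its get_or_compute method are hypothetical helpers, not part of Diffsol or argmin. A plain closure stands in for the expensive solve, so the sketch runs on its own. In the example above, the cached value would instead be the output of solve_dense_sensitivities.

```rust
use std::cell::{Cell, RefCell};

/// Hypothetical memoising wrapper: remembers the result for the most recently
/// evaluated parameter vector and reuses it when the same vector is seen again.
struct CachedEval<R: Clone> {
    last: RefCell<Option<(Vec<f64>, R)>>,
}

impl<R: Clone> CachedEval<R> {
    fn new() -> Self {
        CachedEval { last: RefCell::new(None) }
    }

    /// Returns the cached result if `param` equals the last parameter vector,
    /// otherwise calls `compute`, stores its result and returns it.
    fn get_or_compute<F: FnOnce(&[f64]) -> R>(&self, param: &[f64], compute: F) -> R {
        {
            let cache = self.last.borrow();
            if let Some((p, r)) = &*cache {
                if p.as_slice() == param {
                    return r.clone();
                }
            }
        } // the shared borrow ends here, before we mutate the cache
        let r = compute(param);
        *self.last.borrow_mut() = Some((param.to_vec(), r.clone()));
        r
    }
}

fn main() {
    let cache = CachedEval::new();
    let calls = Cell::new(0);
    // stand-in for an expensive forward + sensitivity solve
    let expensive = |p: &[f64]| {
        calls.set(calls.get() + 1);
        p.iter().map(|x| x * x).sum::<f64>()
    };
    let param = vec![1.3, 1.0];
    let cost = cache.get_or_compute(&param, &expensive); // computed
    let again = cache.get_or_compute(&param, &expensive); // reused from the cache
    println!("cost = {}, again = {}, solves = {}", cost, again, calls.get());
}
```

The comparison is exact, which suits this use: argmin passes the identical vector to the cost and gradient calls. A real version would cache both the outputs and their sensitivities, so that each function can pick the part it needs.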

Backwards Sensitivity Analysis

Backwards sensitivity analysis, as the name suggests, starts from a given cost or loss function that you want to minimise. It then derives the gradient of this function with respect to the parameters of the ODE system using a Lagrange multiplier approach.

Diffsol supports two different classes of loss functions, the first being an integral of a model output function \(g(t, u)\) over time,

$$ G(p) = \int_0^{t_f} g(t, u) dt $$

The second being a sum of \(n\) discrete functions \(h_i(t, u)\) at time points \(t_i\),

$$ G(p) = \int_0^{t_f} \sum_{i=1}^n h_i(t_i, u) \delta(t - t_i) dt $$

Note that the \(h_i\) functions can be a combination of the (continuous) model output function \(g\) and a user-defined discrete function, such as the sum of squares difference between the model output and some observed data.
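Each delta function picks out a single time point, so the integral in the second form collapses to a plain sum of the discrete functions evaluated along the solution. As an illustration, take the sum of squares choice mentioned above, with a scalar model output \(m(t, u)\) and observations \(\hat{y}_i\) (notation chosen here for the example). The cost then becomes

$$ G(p) = \sum_{i=1}^n h_i(t_i, u(t_i)) = \sum_{i=1}^n \left( m(t_i, u(t_i)) - \hat{y}_i \right)^2 $$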

The derivation below is modified from that given in (Rackauckas et al. 2021). It uses the first form of the cost function, but the same approach can be used for the second form.

Lagrangian Multiplier Approach

We wish to minimise the cost function \(G(p)\) with respect to the parameters \(p\), subject to the constraints imposed by the ODE system of equations,

$$ M \frac{du}{dt} = f(t, u, p) $$

where \(M\) is the mass matrix, \(u\) is the state vector, and \(f\) is the right-hand side function. We can write the Lagrangian as

$$ L(u, \lambda, p) = G(p) - \int_0^{t_f} \lambda^T \left( M \frac{du}{dt} - f(t, u, p) \right) dt $$

where \(\lambda\) is the Lagrange multiplier. We can already generate a solution \(u(t)\) to the ODE system that satisfies the constraint. The last term in the Lagrangian is therefore zero, and the gradient of the Lagrangian equals the gradient of the cost function. We can then write the gradient of the cost function as

$$ \frac{dG}{dp} = \int_0^{t_f} (g_p + g_u u_p) dt - \int_0^{t_f} \lambda^T \left( M \frac{du_p}{dt} - f_u u_p - f_p \right) dt $$

where \(g_p\) and \(g_u\) are the partial derivatives of the output function \(g\) with respect to the parameters and state variables, respectively, and \(u_p\) is the partial derivative of the state vector with respect to the parameters, also known as the sensitivities.

This equation can be simplified by using integration by parts, and requiring that the adjoint ODE system is satisfied, which is given by

$$ \begin{aligned} M \frac{d \lambda}{dt} &= -f_u^T \lambda - g_u^T \\ \lambda(t_f) &= 0 \end{aligned} $$

giving the gradient of the cost function as

$$ \frac{dG}{dp} = \lambda^T(0) M u_p(0) + \int_0^{t_f} (g_p + \lambda^T f_p) dt $$
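The boundary term comes from the integration by parts step, which is

$$ -\int_0^{t_f} \lambda^T M \frac{du_p}{dt} dt = -\left[ \lambda^T M u_p \right]_0^{t_f} + \int_0^{t_f} \left( \frac{d\lambda}{dt} \right)^T M u_p \, dt $$

Substituting this back into the expression for \(\frac{dG}{dp}\) gives

$$ \frac{dG}{dp} = \int_0^{t_f} (g_p + \lambda^T f_p) dt + \int_0^{t_f} \left( g_u + \left( \frac{d\lambda}{dt} \right)^T M + \lambda^T f_u \right) u_p \, dt - \lambda^T(t_f) M u_p(t_f) + \lambda^T(0) M u_p(0) $$

Transposing the adjoint equation gives \(\left( \frac{d\lambda}{dt} \right)^T M^T = -\lambda^T f_u - g_u\). When \(M\) is symmetric, the second integral therefore vanishes. The final condition \(\lambda(t_f) = 0\) removes the term at \(t_f\), which leaves the expression above. For a non-symmetric \(M\), the same argument holds with \(M^T\) on the left-hand side of the adjoint equation.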

Solving the Adjoint ODE System

Solving the adjoint ODE system is done in two stages. First, we solve the forward ODE system to get the state vector \(u(t)\). This solution must be available over the entire time interval \([0, t_f]\). We therefore use a checkpointing system that stores the state vector at regular intervals, and interpolate between the checkpoints to get the state vector at any time point. The second stage is to solve the adjoint ODE system backwards in time, starting from the final time point \(t_f\) and using the interpolated state vector to supply \(u(t)\) as needed.
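The checkpoint-and-interpolate idea can be sketched with a toy example. This is not Diffsol's implementation, and it rests on our own simplifying assumptions:

- It integrates the scalar ODE \(du/dt = -u\) with fixed-step RK4.
- It stores \((t, u, du/dt)\) as a checkpoint after every step.
- It reconstructs \(u(t)\) between neighbouring checkpoints with cubic Hermite interpolation.

The reconstructed values are what a backwards adjoint solve would query as it steps from \(t_f\) towards \(0\).

```rust
/// A stored point of the forward solution: time, state and its time derivative.
#[derive(Clone, Copy)]
struct Checkpoint {
    t: f64,
    u: f64,
    du: f64,
}

/// Right-hand side of the toy ODE du/dt = -u.
fn rhs(_t: f64, u: f64) -> f64 {
    -u
}

/// Forward pass: fixed-step RK4 from t = 0 to t_f, storing a checkpoint after every step.
fn forward(u0: f64, t_f: f64, n_steps: usize) -> Vec<Checkpoint> {
    let h = t_f / n_steps as f64;
    let (mut t, mut u) = (0.0, u0);
    let mut cps = vec![Checkpoint { t, u, du: rhs(t, u) }];
    for _ in 0..n_steps {
        let k1 = rhs(t, u);
        let k2 = rhs(t + 0.5 * h, u + 0.5 * h * k1);
        let k3 = rhs(t + 0.5 * h, u + 0.5 * h * k2);
        let k4 = rhs(t + h, u + h * k3);
        u += h / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4);
        t += h;
        cps.push(Checkpoint { t, u, du: rhs(t, u) });
    }
    cps
}

/// Cubic Hermite interpolation of u(t) between the two checkpoints bracketing t.
fn interpolate(cps: &[Checkpoint], t: f64) -> f64 {
    // index of the last checkpoint with time <= t, clamped so that i + 1 is valid
    let i = cps
        .partition_point(|c| c.t <= t)
        .saturating_sub(1)
        .min(cps.len() - 2);
    let (a, b) = (cps[i], cps[i + 1]);
    let h = b.t - a.t;
    let s = (t - a.t) / h;
    let (s2, s3) = (s * s, s * s * s);
    (2.0 * s3 - 3.0 * s2 + 1.0) * a.u
        + (s3 - 2.0 * s2 + s) * h * a.du
        + (-2.0 * s3 + 3.0 * s2) * b.u
        + (s3 - s2) * h * b.du
}

fn main() {
    let cps = forward(1.0, 2.0, 20);
    // query the solution in reverse time order, as a backwards adjoint solve would
    for &t in &[1.95_f64, 1.23, 0.5, 0.01] {
        let approx = interpolate(&cps, t);
        println!("t = {:.2}: interpolated u = {:.6}, exact = {:.6}", t, approx, (-t).exp());
    }
}
```

The sketch uses Hermite interpolation because it reuses the derivative that the solver already evaluates at each checkpoint. That gives fourth-order accuracy between checkpoints, which matches the order of the RK4 steps.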

The gradient \(\frac{dG}{dp}\) can be calculated by performing a quadrature over the time interval \([0, t_f]\) to evaluate the integral term in the equation above. The second form of the cost function, where the discrete functions are evaluated at specific time points, needs special care. In this case, the solver must be stopped at each time point \(t_i\). At that point, the contribution \(M^{-1} g_u^T\) is added to the adjoint state vector \(\lambda\), and the contribution \(g_p\) is added to the gradient \(\frac{dG}{dp}\). Here \(g_u\) and \(g_p\) denote the partial derivatives of \(h_i\).
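This procedure can be illustrated on a scalar problem of our own choosing (not taken from Diffsol):

- The ODE is \(du/dt = f(u, p) = -p u\) with \(u(0) = 1\) and \(M = 1\).
- The cost is \(G(p) = \sum_i (u(t_i) - d_i)^2\), built from made-up observations \(d_i\).
- The partial derivatives are \(f_u = -p\) and \(f_p = -u\).
- Since \(u(0)\) does not depend on \(p\), \(u_p(0) = 0\), and only the quadrature term contributes to \(\frac{dG}{dp}\).

Between observations, the adjoint satisfies \(d\lambda/dt = p \lambda\). Walking backwards in time, we add \(g_u = 2(u(t_i) - d_i)\) to \(\lambda\) at each \(t_i\).

To keep the sketch short, the exact forward solution \(u(t) = e^{-pt}\) stands in for the checkpointed, interpolated forward solution. RK4 integrates \(\lambda\) and the quadrature \(\int \lambda f_p \, dt\) together. The result is compared against the analytic gradient \(\sum_i 2(u(t_i) - d_i)(-t_i e^{-p t_i})\).

```rust
/// Exact forward solution of du/dt = -p u, u(0) = 1 (stand-in for checkpointing).
fn u_exact(p: f64, t: f64) -> f64 {
    (-p * t).exp()
}

/// Right-hand side of the backwards system y = [lambda, q], where
/// dlambda/dt = -f_u * lambda = p * lambda and dq/dt = -lambda * f_p = lambda * u.
/// With q(t_f) = 0, integrating back to t = 0 gives q(0) = int_0^{t_f} lambda * f_p dt.
fn adjoint_rhs(p: f64, t: f64, y: [f64; 2]) -> [f64; 2] {
    [p * y[0], y[0] * u_exact(p, t)]
}

/// One RK4 step of size h (h is negative when stepping backwards in time).
fn rk4_step(p: f64, t: f64, y: [f64; 2], h: f64) -> [f64; 2] {
    let add = |a: [f64; 2], k: [f64; 2], s: f64| [a[0] + s * k[0], a[1] + s * k[1]];
    let k1 = adjoint_rhs(p, t, y);
    let k2 = adjoint_rhs(p, t + 0.5 * h, add(y, k1, 0.5 * h));
    let k3 = adjoint_rhs(p, t + 0.5 * h, add(y, k2, 0.5 * h));
    let k4 = adjoint_rhs(p, t + h, add(y, k3, h));
    [
        y[0] + h / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0]),
        y[1] + h / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1]),
    ]
}

/// Adjoint gradient dG/dp for G(p) = sum_i (u(t_i) - d_i)^2.
/// `ts` must be sorted ascending; the final time t_f is the last observation time.
fn adjoint_gradient(p: f64, ts: &[f64], data: &[f64], steps_per_interval: usize) -> f64 {
    assert_eq!(ts.len(), data.len());
    let mut y = [0.0, 0.0]; // lambda(t_f) = 0 and q(t_f) = 0
    for i in (0..ts.len()).rev() {
        // discrete contribution at t_i: lambda += M^{-1} g_u^T, with M = 1
        y[0] += 2.0 * (u_exact(p, ts[i]) - data[i]);
        // integrate backwards to the previous observation time (or to t = 0)
        let t_prev = if i > 0 { ts[i - 1] } else { 0.0 };
        let h = (t_prev - ts[i]) / steps_per_interval as f64;
        let mut t = ts[i];
        for _ in 0..steps_per_interval {
            y = rk4_step(p, t, y, h);
            t += h;
        }
    }
    // u_p(0) = 0, so the gradient is just the quadrature term q(0)
    y[1]
}

fn main() {
    let ts = [0.5, 1.0, 1.5, 2.0];
    let data = [0.6, 0.35, 0.2, 0.15]; // made-up observations
    let p = 0.8;
    let adjoint = adjoint_gradient(p, &ts, &data, 100);
    let analytic: f64 = ts
        .iter()
        .zip(&data)
        .map(|(&t, &d)| 2.0 * (u_exact(p, t) - d) * (-t * u_exact(p, t)))
        .sum();
    println!("adjoint gradient = {:.8}, analytic gradient = {:.8}", adjoint, analytic);
}
```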

In the case that \(M\) is singular but can be partitioned into an invertible block and zero blocks like so:

$$ M = \begin{bmatrix} M_{11} & 0 \\ 0 & 0 \end{bmatrix} $$

where \(M_{11}\) is invertible, the corresponding block decompositions of the adjoint Jacobian \(f_u^T\) and of the partial derivative of the output function can be written as

$$ f_u^T = \begin{bmatrix} f_{dd} & f_{da} \\ f_{ad} & f_{aa} \end{bmatrix} $$

and

$$ g_u^T = \begin{bmatrix} g_{d} \\ g_{a} \end{bmatrix}. $$

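In this case, the adjoint variables split into differential and algebraic parts, \(\lambda = (\lambda_d, \lambda_a)\). The second block row of the adjoint equation is the algebraic constraint \(0 = -f_{ad} \lambda_d - f_{aa} \lambda_a - g_{a}\). Assuming \(f_{aa}\) is invertible, this gives \(\lambda_a = -f_{aa}^{-1} (f_{ad} \lambda_d + g_{a})\), which can be eliminated from the first block row. The contribution added to \(\lambda_d\) at each time point is then

$$ M_{11}^{-1} \left( g_{d} - f_{da} f_{aa}^{-1} g_{a} \right) $$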

Specifying the discrete functions

Here we consider the second form of the cost function. Suppose we have a model output function \(m(t, u)\) and a set of discrete functions \(h_i(t, u)\) that depend on the state only through the model output (i.e. \(h_i(t, u) = h_i(m(t, u))\)). Then, by the chain rule, the partial derivatives of the cost function \(g\) with respect to the state variables and the parameters are

$$ \begin{aligned} g_u &= g_m m_u \\ g_p &= g_m m_p \end{aligned} $$

where \(m_u\) and \(m_p\) are the partial derivatives of the model output function with respect to the state variables and parameters, respectively. Therefore, a user only has to supply \(g_m\) at each of the time points \(t_i\) and Diffsol will be able to calculate the correct gradients as part of the backwards solution of the adjoint ODE system.
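As a small numerical illustration of this chain rule (our own example, using plain arrays rather than Diffsol types), take a state \(u = (x, v)\) and two hypothetical outputs \(m(u) = (x, \tfrac{1}{2} v^2)\). Then \(m_u = \begin{bmatrix} 1 & 0 \\ 0 & v \end{bmatrix}\). For a squared-error \(h_i\), \(g_m = 2(m - \hat{y}_i)\), and \(g_u\) is the row vector \(g_m\) multiplied by \(m_u\). The helper chain_rule is hypothetical.

```rust
/// Row vector times matrix: returns g_m * jac, where jac[k][j] = d m_k / d u_j.
/// This is the chain rule g_u = g_m m_u; passing the parameter Jacobian m_p
/// instead gives g_p = g_m m_p.
fn chain_rule(g_m: &[f64], jac: &[Vec<f64>]) -> Vec<f64> {
    let n_cols = jac.first().map_or(0, |row| row.len());
    let mut out = vec![0.0; n_cols];
    for (g, row) in g_m.iter().zip(jac) {
        for (o, j) in out.iter_mut().zip(row) {
            *o += g * j;
        }
    }
    out
}

fn main() {
    // hypothetical state u = (x, v) and outputs m(u) = (x, 0.5 * v^2)
    let (x, v) = (0.8, -0.4);
    let m = [x, 0.5 * v * v];
    let y_obs = [0.7, 0.1]; // made-up observation at this time point
    // g_m for the squared-error h = sum_k (m_k - y_k)^2
    let g_m: Vec<f64> = m.iter().zip(&y_obs).map(|(mk, yk)| 2.0 * (mk - yk)).collect();
    // m_u[k][j] = d m_k / d u_j
    let m_u = vec![vec![1.0, 0.0], vec![0.0, v]];
    let g_u = chain_rule(&g_m, &m_u);
    println!("g_m = {:?}, g_u = {:?}", g_m, g_u);
}
```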

Example: Fitting a spring-mass model to data

In this example we’ll fit a damped spring-mass system to some synthetic data (using the model to generate the data). The system consists of a mass \(m\) attached to a spring with spring constant \(k\), and a damping force proportional to the velocity of the mass with damping coefficient \(c\).

\[ \begin{align*} \frac{dx}{dt} &= v \\ \frac{dv}{dt} &= -\frac{k}{m} x - \frac{c}{m} v \end{align*} \]

where \(v = \frac{dx}{dt}\) is the velocity of the mass.

We’ll use the argmin crate to perform the optimisation. To hold the synthetic data and the model, we’ll create a struct Problem like so

```rust
use argmin::{
    core::{observers::ObserverMode, CostFunction, Executor, Gradient},
    solver::{linesearch::MoreThuenteLineSearch, quasinewton::LBFGS},
};
use argmin_observer_slog::SlogLogger;
use diffsol::{
    AdjointOdeSolverMethod, DenseMatrix, DiffSl, Matrix, MatrixCommon, NalgebraMat, NalgebraVec,
    OdeBuilder, OdeEquations, OdeSolverMethod, OdeSolverProblem, OdeSolverState, Op, Scale, Vector,
    VectorCommon, VectorViewMut,
};
use std::cell::RefCell;

type M = NalgebraMat<f64>;
type V = NalgebraVec<f64>;
type T = f64;
type LS = diffsol::NalgebraLU<f64>;
type CG = diffsol::LlvmModule;
type Eqn = DiffSl<M, CG>;

struct Problem {
    // synthetic observations, one column per time point
    ys_data: M,
    // time points at which the data were observed
    ts_data: Vec<T>,
    // wrapped in a RefCell so the argmin traits (which take &self) can update the parameters
    problem: RefCell<OdeSolverProblem<Eqn>>,
}
```

To use argmin, we need to implement its CostFunction and Gradient traits for Problem, which provide the loss function and its gradient. In this case we’ll define a loss function equal to the sum of squares error between the model output and the synthetic data.

$$ \text{loss} = \sum_i \lVert y_i(p) - \hat{y}_i \rVert^2 $$

where \(y_i(p)\) is the vector of model outputs at time index \(i\) as a function of the parameters \(p\), and \(\hat{y}_i\) is the corresponding observed data.

```rust
impl CostFunction for Problem {
    type Output = T;
    type Param = Vec<T>;

    fn cost(&self, param: &Self::Param) -> Result<Self::Output, argmin_math::Error> {
        // set the model parameters to the values proposed by the optimiser
        let mut problem = self.problem.borrow_mut();
        let context = *problem.eqn().context();
        problem
            .eqn_mut()
            .set_params(&V::from_vec(param.clone(), context));

        // solve the forward problem at the data time points; if the solve fails,
        // return a large (but finite) cost instead of an error
        let mut solver = problem.bdf::<LS>().unwrap();
        let ys = match solver.solve_dense(&self.ts_data) {
            Ok(ys) => ys,
            Err(_) => return Ok(f64::MAX / 1000.),
        };

        // sum of squared differences over all time points (columns)
        let loss = ys
            .inner()
            .column_iter()
            .zip(self.ys_data.inner().column_iter())
            .map(|(a, b)| (a - b).norm_squared())
            .sum::<f64>();
        Ok(loss)
    }
}
```

The gradient of this cost function with respect to the model outputs \(y_i\) is

$$ \frac{\partial \text{loss}}{\partial y_i} = 2 (y_i(p) - \hat{y}_i) $$

We compute \(\frac{\partial \text{loss}}{\partial y_i}\) directly, then use Diffsol’s adjoint sensitivity analysis to turn it into a gradient with respect to the parameters. First we solve the forward problem, generating a checkpointing struct. Using the forward solution, we calculate \(\frac{\partial \text{loss}}{\partial y_i}\) for each time point. We then pass these values into the adjoint backwards pass to calculate the gradient of the cost function with respect to the parameters.

```rust
impl Gradient for Problem {
    type Gradient = Vec<T>;
    type Param = Vec<T>;

    fn gradient(&self, param: &Self::Param) -> Result<Self::Gradient, argmin_math::Error> {
        // set the model parameters to the values proposed by the optimiser
        let mut problem = self.problem.borrow_mut();
        let context = *problem.eqn().context();
        problem
            .eqn_mut()
            .set_params(&V::from_vec(param.clone(), context));

        // forward solve, keeping checkpoints for the backwards adjoint pass
        let mut solver = problem.bdf::<LS>().unwrap();
        let (checkpointing, ys) = match solver.solve_dense_with_checkpointing(&self.ts_data, None) {
            Ok(res) => res,
            Err(_) => return Ok(vec![f64::MAX / 1000.; param.len()]),
        };

        // d(loss)/d(y_i) = 2 (y_i - y_hat_i) at each time point (2 rows: one per state)
        let mut g_m = M::zeros(2, self.ts_data.len(), context);
        for j in 0..g_m.ncols() {
            let g_m_i = (ys.column(j) - self.ys_data.column(j)) * Scale(2.0);
            g_m.column_mut(j).copy_from(&g_m_i);
        }

        // backwards adjoint pass: propagate d(loss)/d(y_i) to the gradient w.r.t. the parameters
        let adjoint_solver = problem
            .bdf_solver_adjoint::<LS, _>(checkpointing, Some(1))
            .unwrap();
        match adjoint_solver.solve_adjoint_backwards_pass(self.ts_data.as_slice(), &[&g_m]) {
            Ok(soln) => Ok(soln.as_ref().sg[0].inner().iter().copied().collect::<Vec<_>>()),
            Err(_) => Ok(vec![f64::MAX / 1000.; param.len()]),
        }
    }
}
```

In our main function we’ll create the model, generate some synthetic data, and then call argmin to fit the model to the data.

pub fn main() {
    // true parameters, used to generate the synthetic data
    let (k_true, c_true) = (1.0, 0.1);
    // 101 equally spaced time points on [0, 40]
    let t_data = (0..101)
        .map(|i| f64::from(i) * 40. / 100.)
        .collect::<Vec<f64>>();
    let problem = OdeBuilder::<M>::new()
        .p([k_true, c_true])
        .sens_atol([1e-6])
        .sens_rtol(1e-6)
        .out_atol([1e-6])
        .out_rtol(1e-6)
        .build_from_diffsl(
            "
            in_i { k = 1.0, c = 0.1 }
            m { 1.0 }
            u_i {
                x = 1,
                v = 0,
            }
            F_i {
                v,
                -k/m * x - c/m * v,
            }
        ",
        )
        .unwrap();
    // synthetic data: the model solved with the true parameters
    let ys_data = {
        let mut solver = problem.bdf::<LS>().unwrap();
        solver.solve_dense(&t_data).unwrap()
    };
    let cost = Problem {
        ys_data,
        ts_data: t_data,
        problem: RefCell::new(problem),
    };
    // start the optimisation from a perturbed parameter vector
    let init_param = vec![k_true - 0.1, c_true - 0.01];